Model Re-use

DL Course

Instructor: Hasan A. Poonawala, Joseph Kershaw

Mechanical and Aerospace Engineering

University of Kentucky, Lexington, KY, USA

Overview

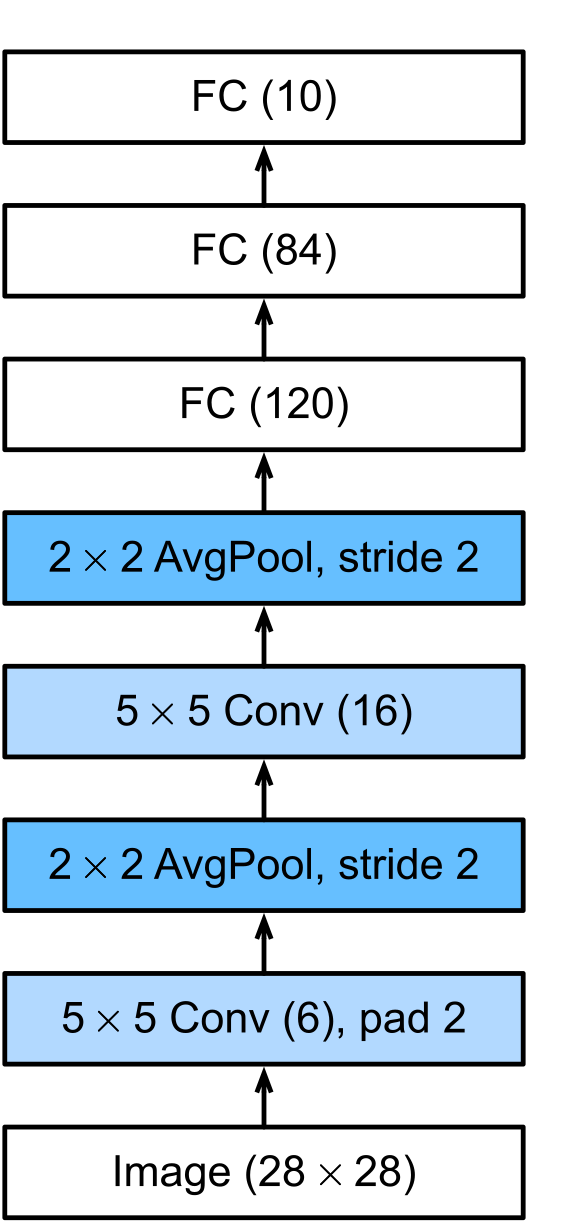

Build LeNet

Finetune or Train ResNet50 on CIFAR10

- ResNet50 was trained on ImageNet (1000 classes)

- Can you retrain it for the smaller CIFAR10 dataset?

- 10 classes, 6000 images each: airplanes, cars, birds, cats, deer, dogs, frogs, horses, ships, and trucks.

LeNet

- A batch of input data has size

- What is the size of the tensor after each block? Write them out.
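One way to check the sizes by hand is the standard output-size formula for convolution and pooling layers, out = floor((in + 2*padding - kernel) / stride) + 1. The sketch below assumes a LeNet-5 style stack applied to 1 x 28 x 28 MNIST images (5x5 convolutions, 2x2 pooling, padding 2 on the first convolution). The layer sizes in the figure may differ, and the helper name `out_size` is made up for this example.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial size after a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1

# Assumed LeNet-5 style layout: (name, out_channels, kernel, stride, padding).
# out_channels=None means the layer keeps the channel count (pooling).
layers = [
    ("conv1", 6, 5, 1, 2),
    ("pool1", None, 2, 2, 0),
    ("conv2", 16, 5, 1, 0),
    ("pool2", None, 2, 2, 0),
]

channels, size = 1, 28  # one MNIST image: 1 x 28 x 28
shapes = []
for name, out_channels, kernel, stride, padding in layers:
    size = out_size(size, kernel, stride, padding)
    channels = out_channels if out_channels is not None else channels
    shapes.append((name, channels, size, size))
    print(f"{name}: (N, {channels}, {size}, {size})")  # N is the batch size

flat_features = channels * size * size
print("flattened features per image:", flat_features)
```

The flattened feature count is what the first fully connected layer must accept as its input size.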

LeNet <folder>/models.py

Task: implement LeNet in PyTorch
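As a starting point for the task, here is a minimal sketch of a LeNet-5 style network for 1 x 28 x 28 MNIST inputs. It is one possible layout, not necessarily the one in the figure: it assumes ReLU activations and max pooling (the original LeNet used different activations and pooling), and padding 2 on the first convolution so that 28x28 inputs work. A saved checkpoint such as `mnist_model_cnn.pth` will only load if its layer names and shapes match, which this sketch does not guarantee.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class LeNet(nn.Module):
    """LeNet-5 style network for 1 x 28 x 28 images (assumed layout)."""

    def __init__(self, num_classes=10):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 6, kernel_size=5, padding=2)  # -> 6 x 28 x 28
        self.conv2 = nn.Conv2d(6, 16, kernel_size=5)            # -> 16 x 10 x 10
        self.pool = nn.MaxPool2d(2, 2)                           # halves height and width
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, num_classes)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))  # -> 6 x 14 x 14
        x = self.pool(F.relu(self.conv2(x)))  # -> 16 x 5 x 5
        x = torch.flatten(x, 1)               # -> 400 features per image
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        return self.fc3(x)                    # raw class scores (logits)


model = LeNet()
batch = torch.zeros(64, 1, 28, 28)  # dummy batch in place of real MNIST data
logits = model(batch)
print(logits.shape)
```

The model returns raw logits, which is what `nn.CrossEntropyLoss` expects and what `argmax` needs for prediction.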

LeNet <folder>/data.py

import torch
from torchvision import datasets, transforms

def load_MNIST(batch_size=64):
    transform = transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.1307,), (0.3081,))
    ])
    train_dataset = datasets.MNIST('data', train=True, download=True, transform=transform)
    train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
    test_dataset = datasets.MNIST('data', train=False, download=True, transform=transform)
    test_loader = torch.utils.data.DataLoader(test_dataset, batch_size=1000, shuffle=False)
    test_plot_loader = torch.utils.data.DataLoader(test_dataset, batch_size=1, shuffle=True)
    return train_dataset, train_loader, test_dataset, test_loader, test_plot_loader

def eval_MNIST(model,test_loader):
    correct = 0
    with torch.no_grad():
        for data, target in test_loader:
            output = model(data)
            pred = output.argmax(dim=1, keepdim=True)
            correct += pred.eq(target.view_as(pred)).sum().item()
    print('\nTest set: Accuracy: {}/{} ({:.0f}%)\n'.format(
        correct, len(test_loader.dataset),
        100. * correct / len(test_loader.dataset)))

LeNet <folder>/test_leNet.py

import torch
import torch.nn.functional as F
from torchvision import datasets, transforms
import matplotlib.pyplot as plt
from data import load_MNIST, eval_MNIST
from models_live import LeNet

_, _, test_dataset, test_loader, test_plot_loader = load_MNIST()

# Accuracy of the untrained (randomly initialized) model
model = LeNet()
eval_MNIST(model,test_loader)

# Load the trained weights and evaluate again
PATH = './mnist_model_cnn.pth'
model.load_state_dict(torch.load(PATH))
eval_MNIST(model,test_loader)

Overview

Build LeNet

Finetune or Train ResNet50 on CIFAR10

- ResNet50 was trained on ImageNet (1000 classes)

- Can you retrain it for the smaller CIFAR10 dataset?

- 10 classes, 6000 images each: airplanes, cars, birds, cats, deer, dogs, frogs, horses, ships, and trucks.

CIFAR10

ResNet50

Try this

Download code/resnets/data.py and run this:

import torch
import torchvision
import torchvision.transforms as transforms
import torchvision.models as models
import os
from data import load_cifar, test_cifar, show_cifar

if __name__ == "__main__":
    #1. Load Data
    transform = transforms.Compose(
        [transforms.ToTensor(),
         transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])
    classes, trainset, trainloader, testset, testloader = load_cifar(transform,batch_size=16)
    device = torch.device("cpu")
    print(f'Using {device} for inference')

    #2. Load Model
    ## Try this direct model
    model = models.resnet50(pretrained=True)
    model.to(device)

    #5. Evaluate
    print('Accuracy before training')
    test_cifar(model,testloader,device,classes)
    show_cifar(model,testloader,device,classes)

Customization

code/resnets/models.py

import torch
import torch.nn as nn
import torch.nn.functional as F
import torchvision.models as models

class ResNetCIFAR(nn.Module):
    # ImageNet-pretrained ResNet50 with the final layer replaced by a 10-class head
    def __init__(self):
        super().__init__()
        resnet50 = models.resnet50(pretrained=True)
        resnet50.fc = torch.nn.Linear(resnet50.fc.in_features, 10)
        torch.nn.init.xavier_uniform_(resnet50.fc.weight)
        self.resnet50 = resnet50

    def forward(self,x):
        return self.resnet50(x)

class ResNet18CIFAR(nn.Module):
    # Same idea with the smaller ResNet18
    def __init__(self):
        super().__init__()
        resnet18 = models.resnet18(pretrained=True)
        resnet18.fc = torch.nn.Linear(resnet18.fc.in_features, 10)
        torch.nn.init.xavier_uniform_(resnet18.fc.weight)
        self.resnet18 = resnet18

    def forward(self,x):
        return self.resnet18(x)

class Net(nn.Module):
    # Small LeNet-style CNN for 3x32x32 CIFAR10 images
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 100, 5)
        self.pool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(100, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = torch.flatten(x, 1) # flatten all dimensions except batch
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x

class Interpolate(nn.Module):
    # Wraps nn.functional.interpolate so it can be used as a layer
    def __init__(self, scale_factor, mode):
        super().__init__()
        self.interp = nn.functional.interpolate
        self.scale_factor = scale_factor
        self.mode = mode

    def forward(self, x):
        x = self.interp(x, scale_factor=self.scale_factor, mode=self.mode, align_corners=False)
        return x

class ResNetInt(nn.Module):
    def __init__(self):
        super().__init__()
        # Upsample 32x32 inputs by a factor of 7 to 224x224 before the ResNet
        self.de_layer3 = Interpolate(scale_factor=7, mode='bilinear')
        # The attribute is named resnet50, but the network loaded here is ResNet18
        self.resnet50 = models.resnet18(pretrained=True)
        self.resnet50.fc = nn.Linear(self.resnet50.fc.in_features, 10)
        nn.init.xavier_uniform_(self.resnet50.fc.weight)
        self.decoder = nn.Sequential(
            self.de_layer3,
            self.resnet50
        )

    def forward(self, x):
        return self.decoder(x)
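When only the new head is meant to be trained, one common option is to also freeze the pretrained parameters, so that no gradients are computed or stored for them. The sketch below illustrates the pattern on a small stand-in network: `TinyBackbone` is a hypothetical placeholder for a pretrained model with an `fc` layer, used so the example runs without downloading weights. It is not part of the course code.

```python
import torch
import torch.nn as nn


class TinyBackbone(nn.Module):
    """Hypothetical stand-in for a pretrained network with a final `fc` layer."""

    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 8, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
        )
        self.fc = nn.Linear(8, 1000)  # original head: 1000 classes

    def forward(self, x):
        return self.fc(self.features(x))


net = TinyBackbone()

# Freeze every existing parameter.
for param in net.parameters():
    param.requires_grad = False

# Replace the head; a freshly created layer is trainable by default.
net.fc = nn.Linear(net.fc.in_features, 10)
nn.init.xavier_uniform_(net.fc.weight)

trainable = [name for name, p in net.named_parameters() if p.requires_grad]
print(trainable)

# Only the new head is handed to the optimizer.
optimizer = torch.optim.SGD(net.fc.parameters(), lr=1e-4, momentum=0.9)
out = net(torch.zeros(2, 3, 32, 32))
print(out.shape)
```

Passing only the `fc` parameters to the optimizer already keeps the backbone weights fixed. Freezing additionally skips the gradient computation for them, which can reduce memory use and time per step.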

Finetune ResNet50

code/resnets/finetune_resnet50_cifar10.py

## Trains a model-mod where the final layer output size is reduced for CIFAR10, and CIFAR10 images are resized to 224x224.

## We only train the fc layer

## Based on https://pytorch.org/hub/nvidia_deeplearningexamples_model/

## Modified by Hasan Poonawala

import torch
from PIL import Image
import torchvision
import torchvision.transforms as transforms
import numpy as np
import json
import requests
import matplotlib.pyplot as plt
import warnings
warnings.filterwarnings('ignore')
import os
from data import load_cifar, test_cifar, show_cifar
from utils import imshow
from models import ResNetCIFAR
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

if __name__ == "__main__":
    # max_split_size_mb takes a plain number of megabytes
    os.environ["PYTORCH_CUDA_ALLOC_CONF"] = "max_split_size_mb:256"
    torch.cuda.empty_cache()

    #1. Load Data
    transform = transforms.Compose(
        [torchvision.transforms.Resize((224,224)),
         transforms.ToTensor(),
         transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])
    classes, trainset, trainloader, testset, testloader = load_cifar(transform,batch_size=16)
    device = torch.device("mps") if torch.backends.mps.is_available() else torch.device("cpu")
    print(f'Using {device} for inference')

    # PATH = 'cifar_model_v3.pth'
    # model.load_state_dict(torch.load(PATH))
    model = ResNetCIFAR()
    model.to(device)

    print('Accuracy before training')
    test_cifar(model,testloader,device,classes)
    show_cifar(model,testloader,device,classes)

    criterion = nn.CrossEntropyLoss()
    # Only the parameters of the new fc layer are updated
    optimizer = optim.SGD(model.resnet50.fc.parameters(), lr=0.0001, momentum=0.9)

    print('Start training')
    model.train()
    for epoch in range(30): # loop over the dataset multiple times
        running_loss = 0.0
        for i, data in enumerate(trainloader, 0):
            # get the inputs; data is a list of [inputs, labels]
            # inputs, labels = data
            inputs, labels = data[0].to(device), data[1].to(device)

            # zero the parameter gradients
            optimizer.zero_grad()

            # forward + backward + optimize
            outputs = model(inputs)
            loss = criterion(outputs, labels)
            loss.backward()
            optimizer.step()

            # print statistics
            running_loss += loss.item()
            if i % 200 == 199: # print every 200 mini-batches
                print(f'[{epoch + 1}, {i + 1:5d}] loss: {running_loss / 200:.3f}')
                running_loss = 0.0

    print('Finished Training')
    PATH = './cifar_model_v4.pth'
    torch.save(model.state_dict(), PATH)

    print('Accuracy after training')
    test_cifar(model,testloader,device,classes)
    show_cifar(model,testloader,device,classes)

Interpolate

code/resnets/interpolate_resnet50_cifar10.py

## Trains a resnet50-mod where the final layer output size is reduced for CIFAR10, and an interpolate layer accounts for the input image size difference. As a result, the batch size on the home PC was 8, rather than 2 when using resize.

## full net is trained

## Based on https://pytorch.org/hub/nvidia_deeplearningexamples_resnet50/

## Modified by Hasan Poonawala

import torch
from PIL import Image
import torchvision
import torchvision.transforms as transforms
import numpy as np
import json
import requests
import matplotlib.pyplot as plt
import warnings
warnings.filterwarnings('ignore')
import os
from data import load_cifar, test_cifar, show_cifar
from utils import imshow
from models import ResNetInt
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

if __name__ == "__main__":
    # max_split_size_mb takes a plain number of megabytes
    os.environ["PYTORCH_CUDA_ALLOC_CONF"] = "max_split_size_mb:256"
    torch.cuda.empty_cache()

    #1. Load Data
    # No Resize here: the model's Interpolate layer upsamples the 32x32 images
    transform = transforms.Compose(
        [transforms.ToTensor(),
         transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])
    classes, trainset, trainloader, testset, testloader = load_cifar(transform,batch_size=8)
    device = torch.device("mps") if torch.backends.mps.is_available() else torch.device("cpu")
    print(f'Using {device} for inference')

    # PATH = 'cifar_model_v4.pth'
    # model.load_state_dict(torch.load(PATH))
    model = ResNetInt()
    model.to(device)

    print('Accuracy before training')
    test_cifar(model,testloader,device,classes)
    show_cifar(model,testloader,device,classes)

    criterion = nn.CrossEntropyLoss()
    # Only the fc parameters are passed to the optimizer here;
    # pass model.parameters() instead to update the full net
    optimizer = optim.SGD(model.resnet50.fc.parameters(), lr=0.0001, momentum=0.9)

    print('Start training')
    model.train()
    for epoch in range(2): # loop over the dataset multiple times
        running_loss = 0.0
        for i, data in enumerate(trainloader, 0):
            # get the inputs; data is a list of [inputs, labels]
            # inputs, labels = data
            inputs, labels = data[0].to(device), data[1].to(device)

            # zero the parameter gradients
            optimizer.zero_grad()

            # forward + backward + optimize
            outputs = model(inputs)
            loss = criterion(outputs, labels)
            loss.backward()
            optimizer.step()

            # print statistics
            running_loss += loss.item()
            if i % 200 == 199: # print every 200 mini-batches
                print(f'[{epoch + 1}, {i + 1:5d}] loss: {running_loss / 200:.3f}')
                running_loss = 0.0

    print('Finished Training')
    PATH = './cifar_model_v5.pth'
    torch.save(model.state_dict(), PATH)

    print('Accuracy after training')
    test_cifar(model,testloader,device,classes)

    show_cifar(model,testloader,device,classes)
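The `Interpolate` layer in `ResNetInt` upsamples inside the model instead of in the data transform. The following minimal check uses random data in place of real CIFAR10 images to confirm that a scale factor of 7 maps 32x32 inputs to the 224x224 size used in the resize-based script. The batch size of 8 is taken from the interpolate script; everything else is illustrative.

```python
import torch
import torch.nn.functional as F

x = torch.rand(8, 3, 32, 32)  # dummy CIFAR10-sized batch
y = F.interpolate(x, scale_factor=7, mode="bilinear", align_corners=False)
print(x.shape, "->", y.shape)

# The upsampled batch holds 7 * 7 = 49 times as many values as the input batch.
growth = y.numel() // x.numel()
print("size growth factor:", growth)
```

Because the upsampling happens inside the model, the data loader only has to hold and transfer the small 32x32 images.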

LeResNet

From the paper
