ME/AER 647 Systems Optimization I

Linear Programming

Instructor: Hasan A. Poonawala

Mechanical and Aerospace Engineering

University of Kentucky, Lexington, KY, USA

Topics:

Equality Constraints

Inequality Constraints

KKT Conditions

Introduction

Standard Form

Linear Program (LP)

An LP is an optimization problem in which the objective function is linear in the unknowns and the constraints consist of linear (in)equalities.

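Standard form

$$
\begin{aligned}
\text{minimize} \quad & c^T x \\
\text{subject to} \quad & Ax = b, \quad x \ge 0
\end{aligned}
$$

- $x$ and $c$ are $n$-dimensional column vectors, $A$ is a fat $m \times n$ matrix ($m < n$), and $b$ is an $m$-dimensional column vector.

- The $a_{ij}$'s, $b_i$'s and $c_j$'s are fixed real constants, and the $x_j$'s are real numbers to be determined.

- We assume that each equation has been multiplied by minus unity, if necessary, so that each $b_i \ge 0$.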

Conversion to Standard Form: Slack Variables

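Consider the problem with inequality constraints: minimize $c^T x$ subject to $Ax \le b$, $x \ge 0$. This problem may be alternatively expressed as

$$
\begin{aligned}
\text{minimize} \quad & c^T x \\
\text{subject to} \quad & Ax + y = b, \quad x \ge 0, \quad y \ge 0
\end{aligned}
$$

- The new nonnegative variables $y_i$, introduced to convert inequalities to equalities, are called slack variables.

- The constraint matrix is now transformed to $[A, I]$.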

Conversion to Standard Form: Surplus Variables

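- If the linear inequalities are reversed so that a typical inequality is

$$a_{i1} x_1 + a_{i2} x_2 + \cdots + a_{in} x_n \ge b_i,$$

it is clear that this is equivalent to

$$a_{i1} x_1 + a_{i2} x_2 + \cdots + a_{in} x_n - y_i = b_i$$

with $y_i \ge 0$.

Variables, such as $y_i$, adjoined in this fashion to convert a “greater than or equal to” inequality to equality are called surplus variables.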

By suitably multiplying by minus unity, and adjoining slack and surplus variables, any set of linear inequalities can be converted to standard form.
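The conversion rules above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a library routine: the function name `to_standard_form`, the `senses` encoding, and the small data set are all hypothetical. Each inequality row receives one slack (`+1`) or surplus (`-1`) column, and rows are then multiplied by minus unity where needed so that every right-hand side is nonnegative.

```python
def to_standard_form(A, b, senses):
    """Rewrite rows A[i].x (<=, >=, =) b[i], x >= 0, as equalities with b >= 0.

    senses[i] is "<=", ">=" or "=" (a hypothetical encoding for this sketch).
    """
    n_extra = sum(1 for s in senses if s != "=")
    A_std, b_std = [], []
    k = 0  # position of the next slack/surplus column
    for row, rhs, sense in zip(A, b, senses):
        extra = [0] * n_extra
        if sense == "<=":      # slack variable: row + y = rhs
            extra[k] = 1
            k += 1
        elif sense == ">=":    # surplus variable: row - y = rhs
            extra[k] = -1
            k += 1
        new_row = list(row) + extra
        if rhs < 0:            # multiply the equation by minus unity
            new_row = [-v for v in new_row]
            rhs = -rhs
        A_std.append(new_row)
        b_std.append(rhs)
    return A_std, b_std


# Hypothetical data: x1 + 2 x2 <= 4,  3 x1 - x2 >= -2,  x1 + x2 = 1.
A_std, b_std = to_standard_form([[1, 2], [3, -1], [1, 1]], [4, -2, 1], ["<=", ">=", "="])
```

The second row shows why the sign flip is applied after the surplus variable is attached: the surplus coefficient changes from `-1` to `+1` together with the rest of the row.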

Conversion to Standard Form: Free variables – First Method

Suppose one or more of the unknown variables is not required to be nonnegative, say, the restriction $x_1 \ge 0$ is not present so that $x_1$ is free to take on either positive or negative values.

- We then write $x_1 = u_1 - v_1$, and require that $u_1 \ge 0$, $v_1 \ge 0$.

- We substitute $u_1 - v_1$ for $x_1$ everywhere.

- The problem is then expressed in terms of the $n + 1$ variables $u_1, v_1, x_2, \dots, x_n$.

- There is a certain degree of redundancy introduced in this technique since a constant added to both $u_1$ and $v_1$ does not change $x_1$.

- Nevertheless, the simplex method can still be used to find the solution.

Conversion to Standard Form: Free variables – Second Method

- Take the same free variable situation, i.e., $x_1$ is free to take on positive or negative values.

- One can take any one of the $m$ equality constraints which has a nonzero coefficient for $x_1$, say, for example,

$$a_{i1} x_1 + a_{i2} x_2 + \cdots + a_{in} x_n = b_i,$$

where $a_{i1} \ne 0$.

- Then $x_1$ can be expressed as a linear combination of the other variables, plus a constant.

- This expression can be substituted everywhere for $x_1$ and we are led to a new problem of exactly the same form but expressed in terms of the variables $x_2, x_3, \dots, x_n$ only.

- Furthermore, the $i$th equation, used to determine $x_1$, is now identically zero and it too can be eliminated.

- We obtain a linear program having $n - 1$ variables and $m - 1$ constraint equations.

Example – Specific Case

is free, so we can solve for it from the first constraint, obtaining

Substituting this into the objective and the second constraint, we obtain the equivalent problem

which is a problem in standard form.

After the smaller problem is solved (what is the answer?) the value for can be found from Equation 1.
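The elimination of a free variable can be checked mechanically. The sketch below is illustrative only: the numerical instance (minimize $x_1 + 3x_2 + 4x_3$ subject to $x_1 + 2x_2 + x_3 = 5$, $2x_1 + 3x_2 + x_3 = 6$, with $x_1$ free) is an assumed example, and the helper name `eliminate_free` is hypothetical.

```python
from fractions import Fraction as F

# Assumed instance (hypothetical, for illustration):
#   minimize x1 + 3 x2 + 4 x3
#   subject to x1 + 2 x2 + x3 = 5,  2 x1 + 3 x2 + x3 = 6,  x2, x3 >= 0,  x1 free.
c = [F(1), F(3), F(4)]
rows = [([F(1), F(2), F(1)], F(5)), ([F(2), F(3), F(1)], F(6))]


def eliminate_free(c, rows, k, i):
    """Solve row i for the free variable x_k and substitute it everywhere."""
    a_i, b_i = rows[i]
    piv = a_i[k]                       # must be nonzero
    const = b_i / piv                  # x_k = const + sum_j coef[j] * x_j
    coef = [-a / piv for a in a_i]
    coef[k] = F(0)
    keep = [j for j in range(len(c)) if j != k]
    new_c = [c[j] + c[k] * coef[j] for j in keep]
    offset = c[k] * const              # constant term moved out of the objective
    new_rows = [([a[j] + a[k] * coef[j] for j in keep], b - a[k] * const)
                for r, (a, b) in enumerate(rows) if r != i]
    return new_c, offset, new_rows


new_c, offset, new_rows = eliminate_free(c, rows, k=0, i=0)
```

For this assumed instance the reduced problem has one fewer variable and one fewer constraint: the objective becomes $x_2 + 3x_3$ plus a constant, and the remaining constraint reads $-x_2 - x_3 = -4$, which is $x_2 + x_3 = 4$ after multiplying by minus unity.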

Examples of Linear Programming Problems

Example 1 – The Diet Problem

Determine the most economical diet that satisfies the basic minimum nutritional requirements for good health

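- There are $n$ different foods available.

- There are $m$ basic nutritional ingredients.

- Each unit of food $j$ contains $a_{ij}$ units of the $i$th nutrient.

- The $j$th food sells at a price $c_j$ per unit.

- Each individual must receive at least $b_i$ units of the $i$th nutrient per day.

If we denote by $x_j$ the number of units of food $j$ in the diet, the problem is to select the $x_j$'s to minimize the total cost

$$c_1 x_1 + c_2 x_2 + \cdots + c_n x_n$$

subject to the nutritional constraints

$$a_{i1} x_1 + a_{i2} x_2 + \cdots + a_{in} x_n \ge b_i, \quad i = 1, \dots, m,$$

and the nonnegativity constraints $x_j \ge 0$, $j = 1, \dots, n$, on the food quantities.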

This problem can be converted to standard form by subtracting a nonnegative surplus variable from the left side of each of the linear inequalities.

Example 2 – The Resource-Allocation Problem

- A facility is capable of manufacturing $n$ different products.

- Each product can be produced at any level $x_j \ge 0$, $j = 1, \dots, n$.

- Each unit of the $j$th product needs $a_{ij}$ units of the $i$th resource, $i = 1, \dots, m$.

- Each product may require various amounts of different resources.

- Each unit of the $j$th product can sell for $c_j$ dollars.

- The $b_i$, $i = 1, \dots, m$, describe the available quantities of the $m$ resources.

We wish to manufacture products at maximum revenue

$$\text{maximize} \quad c_1 x_1 + c_2 x_2 + \cdots + c_n x_n$$

subject to the resource constraints

$$a_{i1} x_1 + a_{i2} x_2 + \cdots + a_{in} x_n \le b_i, \quad i = 1, \dots, m,$$

and the nonnegativity constraints on all production variables.

- The problem can also be interpreted as

- fund $n$ different activities, where

- $c_j$ is the full reward from the $j$th activity,

- $x_j$ is restricted to $0 \le x_j \le 1$, representing the funding level from $0\%$ to $100\%$.

Example 3 – The Transportation Problem

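- Quantities $a_1, a_2, \dots, a_m$ of a certain product are to be shipped from each of $m$ locations.

- Shipping a unit of product from origin $i$ to destination $j$ costs $c_{ij}$.

- These products will be received in amounts of $b_1, b_2, \dots, b_n$ at each of $n$ destinations.

- We want to determine the amounts $x_{ij}$ to be shipped between each origin-destination pair $i = 1, \dots, m$; $j = 1, \dots, n$.

$$
\begin{array}{cccc|c}
x_{11} & x_{12} & \cdots & x_{1n} & a_1 \\
x_{21} & x_{22} & \cdots & x_{2n} & a_2 \\
\vdots & \vdots & & \vdots & \vdots \\
x_{m1} & x_{m2} & \cdots & x_{mn} & a_m \\
\hline
b_1 & b_2 & \cdots & b_n &
\end{array}
$$

- The $i$th row in this array defines the variables associated with the $i$th origin.

- The $j$th column defines the variables associated with the $j$th destination.

- Problem: find the nonnegative variables $x_{ij}$ so that the sum across the $i$th row is $a_i$, the sum down the $j$th column is $b_j$, and the weighted sum $\sum_{i=1}^{m} \sum_{j=1}^{n} c_{ij} x_{ij}$ is minimized.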
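The row-sum and column-sum requirements can be written as a single equality system $Ax = b$ once the $x_{ij}$ are stacked into one vector. The following sketch builds that constraint matrix; the function name `transportation_constraints`, the row-major ordering $x_{11}, x_{12}, \dots, x_{mn}$, and the sample shipping plan are assumptions made for illustration.

```python
def transportation_constraints(m, n):
    """Constraint matrix for m origins and n destinations.

    Variables are ordered row-major: index k = i * n + j stands for x_ij
    (0-based i, j). The first m rows are the origin (row-sum) equations,
    the last n rows are the destination (column-sum) equations.
    """
    rows = []
    for i in range(m):
        rows.append([1 if k // n == i else 0 for k in range(m * n)])
    for j in range(n):
        rows.append([1 if k % n == j else 0 for k in range(m * n)])
    return rows


A = transportation_constraints(2, 3)

# Hypothetical shipping plan with supplies (5, 7) and demands (4, 6, 2).
x = [4, 1, 0,
     0, 5, 2]
totals = [sum(a * v for a, v in zip(row, x)) for row in A]
```

Every column of this matrix contains exactly two ones, one in an origin row and one in a destination row.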

Example 4 – The Maximal Flow Problem

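Maximal flow problem

Determine the maximal flow that can be established in such a network.

$$
\begin{aligned}
\text{maximize} \quad & f \\
\text{subject to} \quad & \sum_{j} x_{1j} - \sum_{j} x_{j1} - f = 0, \\
& \sum_{j} x_{ij} - \sum_{j} x_{ji} = 0, \quad i \ne 1, m, \\
& \sum_{j} x_{mj} - \sum_{j} x_{jm} + f = 0, \\
& 0 \le x_{ij} \le k_{ij}, \quad \text{for all } i, j,
\end{aligned}
$$

where $k_{ij} = 0$ for those no-arc pairs $(i, j)$.

- Capacitated network in which two special nodes, called the source (node 1) and the sink (node $m$), are distinguished.

- All other nodes must satisfy the conservation requirement: net flow into these nodes must be zero.

- The source may have a net outflow,

- the sink may have a net inflow.

- The outflow $f$ of the source will equal the inflow of the sink.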

Example 5 – A Supply-Chain Problem

A warehouse is buying and selling stock of a certain commodity in order to maximize profit over a certain length of time.

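- Warehouse has a fixed capacity $C$.

- The price, $p_i$, of the commodity is known to fluctuate over a number of time periods, say $n$ months, indexed by $i$.

- The warehouse is originally empty and is required to be empty at the end of the last period.

- There is a cost $r$ per unit of holding stock for one period.

- In any period the same price holds for both purchase and sale.

- $x_i$: level of stock in the warehouse at the beginning of period $i$, $u_i$: amount bought during this period, $s_i$: amount sold during this period.

$$
\begin{aligned}
\text{maximize} \quad & \sum_{i=1}^{n} \left( p_i (s_i - u_i) - r x_i \right) \\
\text{subject to} \quad & x_{i+1} = x_i + u_i - s_i, \quad i = 1, \dots, n - 1, \\
& 0 = x_n + u_n - s_n, \\
& x_i + z_i = C, \quad i = 2, \dots, n, \\
& x_1 = 0, \quad x_i \ge 0, \quad u_i \ge 0, \quad s_i \ge 0, \quad z_i \ge 0,
\end{aligned}
$$

where $z_i$ is a slack variable.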

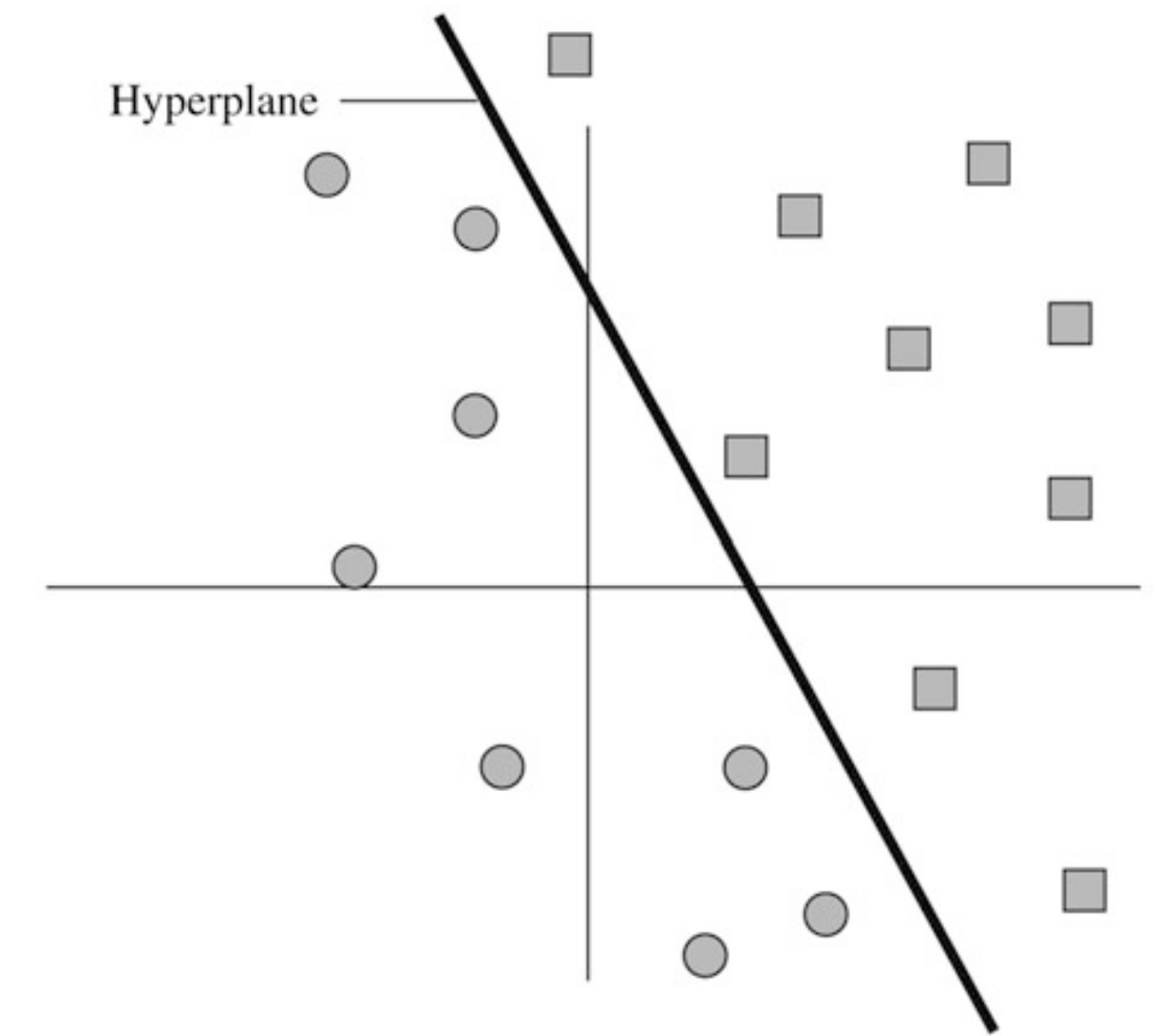

Example 6 – Linear Classifier and Support Vector Machine

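$d$-dimensional data points are to be classified into two distinct classes.

- In general, we have vectors $a_i \in E^d$ for $i = 1, \dots, n_1$ and vectors $b_j \in E^d$ for $j = 1, \dots, n_2$.

- We wish to find a hyperplane that separates the $a_i$'s from the $b_j$'s, i.e., find a slope-vector $y \in E^d$ and an intercept $\beta$ such that

$$
\begin{aligned}
a_i^T y + \beta &\ge 1, \quad \text{for all } i, \\
b_j^T y + \beta &\le -1, \quad \text{for all } j,
\end{aligned}
$$

where $\{x : x^T y + \beta = 0\}$ is the desired hyperplane.

- The separation is defined by the fixed margins $+1$ and $-1$, which could be made soft or variable later.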

Example

- Two-dimensional data points may be grade averages in science and humanities for different students.

- We also know the academic major of each student, as being science or humanities, which serves as the classification.

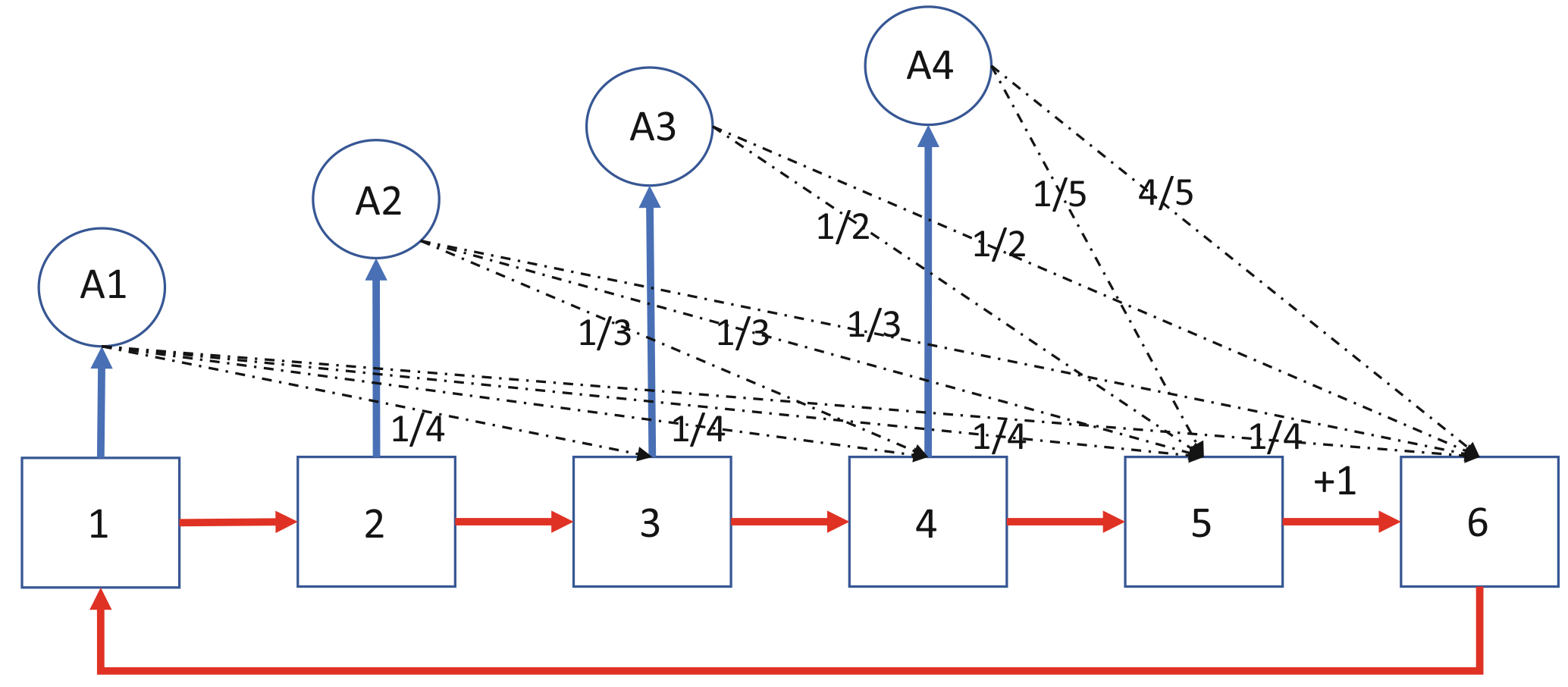

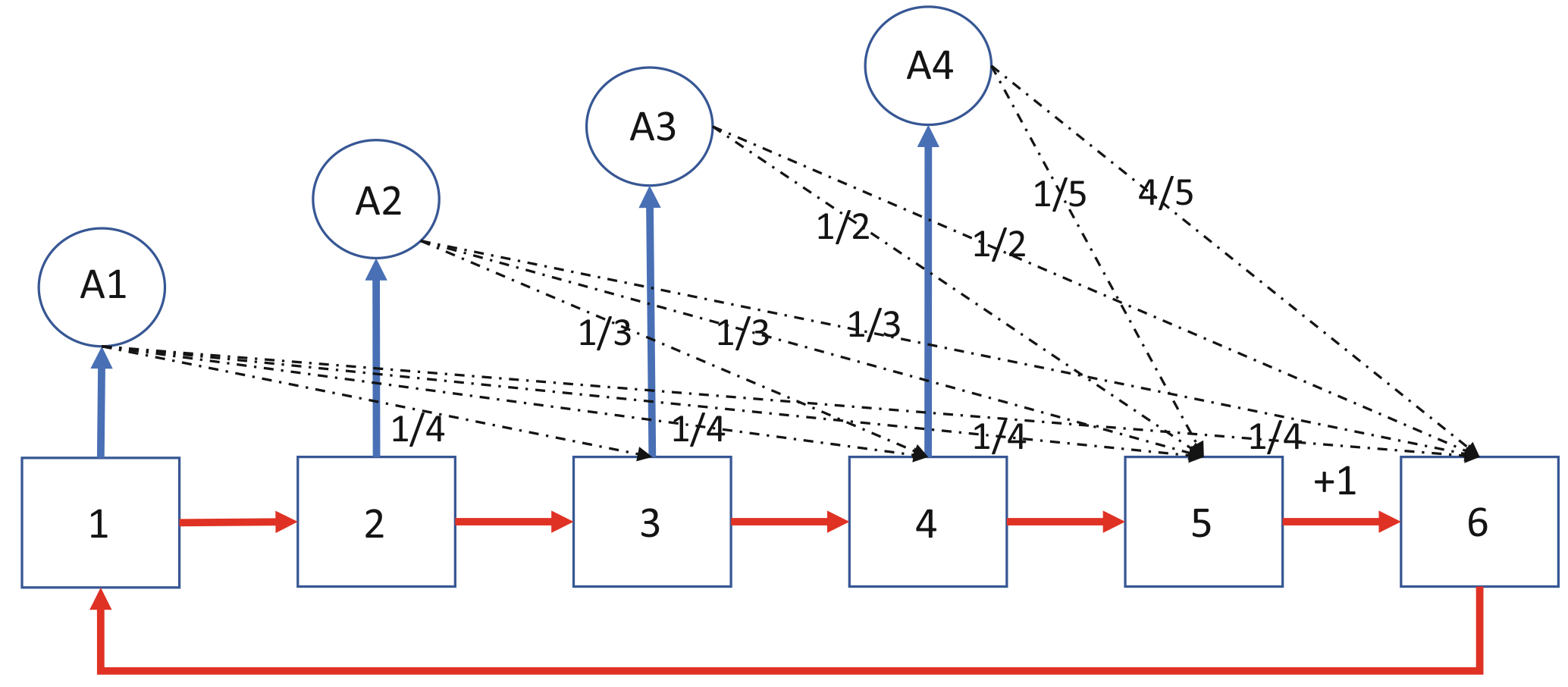

Example 7 – Markov Decision Process (MDP)

Markov Decision Process

An MDP problem is defined by a finite number of states, indexed by $i = 1, \dots, m$, where each state $i$ has a finite set of actions, $\mathcal{A}_i$, to take.

- Each action, say $j \in \mathcal{A}_i$, is associated with

- an immediate cost $c_j$ of taking it,

- a probability distribution $p_j$ to transfer to all possible states at the next time period.

- A stationary policy for the decision maker is a function $\pi = \{\pi_1, \pi_2, \dots, \pi_m\}$ that specifies a single action in every state, $\pi_i \in \mathcal{A}_i$.

MDP Problem

Find a stationary policy to minimize or maximize the discounted sum of expected costs or rewards over the infinite time horizon with a discount factor $0 \le \gamma < 1$, when the process starts from state $i^0$:

$$\sum_{t=0}^{\infty} \gamma^t \, \mathrm{E}\left[ c_{\pi_{i^t}} \right],$$

where $i^t$ denotes the state at time $t$.


Maze Runner Game

Each square represents a state, where each of states has two possible actions to take:

- red action: moves to the next state at the next time period,

- blue action: shortcut move, with a probability distribution, to a state at the next time period.

Each state of has only one action

- moving to state (Exit state),

- moving to state (Start state).

All actions have zero cost, except state ’s (Trap state) action, which has a -unit cost to get out.

Suppose that the game is played indefinitely. What is the optimal policy? That is, which action is best to take in every state at any time, to minimize the present-expected total cost?

MDP Problem

Two constraints for the two actions of State

Only constraint for the single action of State

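- Let $y_i^*$, $i = 1, \dots, m$, represent the optimal present-expected cost when the process starts at state $i$ and time $0$,

- also called the cost-to-go value of state $i$.

- The $y_i^*$'s follow Bellman’s principle of optimality:

$$y_i^* = \min_{j \in \mathcal{A}_i} \left\{ c_j + \gamma\, p_j^T y^* \right\}, \quad \text{for all } i,$$

where $c_j$ is the immediate cost of taking action $j$ at the current time period, and $\gamma\, p_j^T y^*$ is the optimal expected cost from the next time period on.

- When $y^*$ is known for every state, the optimal action in each state $i$ would be

$$\pi_i^* = \arg\min_{j \in \mathcal{A}_i} \left\{ c_j + \gamma\, p_j^T y^* \right\}.$$

- One observes that $y^*$ is a fixed point of the Bellman operator, and it can be computed by the following linear program.

$$
\begin{aligned}
\text{maximize} \quad & \sum_{i=1}^{m} y_i \\
\text{subject to} \quad & y_i - \gamma\, p_j^T y \le c_j, \quad \text{for all } j \in \mathcal{A}_i, \ \text{for all } i.
\end{aligned}
$$

Basically, we relax the “$= \min$” operator to “$\le$” in Bellman’s principle, turn the resulting inequalities into the constraints, and then maximize the sum of the $y_i$'s as the objective.

When the objective is maximized, at least one inequality constraint in $\mathcal{A}_i$ must become an equality for every state $i$, so that $y^*$ is a fixed-point solution of the Bellman operator.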
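The relationship between the Bellman fixed point and the LP constraints can be checked numerically. The sketch below is a hedged illustration: it uses plain value iteration (repeated application of the Bellman operator) rather than an LP solver, and the 3-state MDP, the function names `value_iteration` and `lp_slacks`, and the discount factor are all assumptions, not the maze example from these notes. It then verifies that the computed values satisfy every constraint $y_i - \gamma\, p_j^T y \le c_j$ and that at least one constraint per state is tight.

```python
def value_iteration(actions, gamma, iters=2000):
    """Iterate y_i <- min_j (c_j + gamma * p_j . y), starting from y = 0.

    actions[i] is a list of (cost, probs) pairs, one pair per action of state i.
    """
    y = [0.0] * len(actions)
    for _ in range(iters):
        y = [min(c + gamma * sum(p * v for p, v in zip(probs, y))
                 for c, probs in state_actions)
             for state_actions in actions]
    return y


def lp_slacks(actions, gamma, y):
    """Slack c_j + gamma * p_j . y - y_i of each LP constraint, grouped by state."""
    return [[c + gamma * sum(p * v for p, v in zip(probs, y)) - y[i]
             for c, probs in state_actions]
            for i, state_actions in enumerate(actions)]


# Hypothetical 3-state MDP; state 2 plays the role of a costly "trap".
actions = [
    [(0.0, [0.0, 1.0, 0.0]), (0.0, [0.0, 0.5, 0.5])],  # state 0: two actions
    [(0.0, [1.0, 0.0, 0.0]), (0.0, [0.0, 0.0, 1.0])],  # state 1: two actions
    [(1.0, [1.0, 0.0, 0.0])],                          # state 2: one action, cost 1
]
gamma = 0.9
y_star = value_iteration(actions, gamma)
slacks = lp_slacks(actions, gamma, y_star)
```

In each state, the action whose slack is zero is an optimal action, which mirrors the $\arg\min$ rule stated above.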

Basic Feasible Solutions

Basic Solutions

System of equalities

$$Ax = b, \tag{2}$$

where $x$ is an $n$-vector, $b$ an $m$-vector, and $A$ an $m \times n$ matrix.

- From the $n$ columns of $A$, we select a set of $m$ linearly independent columns.

- WLOG, assume that the first $m$ columns of $A$ are selected: denote the $m \times m$ matrix determined by these columns by $B$.

- The matrix $B$ is nonsingular and we may uniquely solve the equation

$$B x_B = b$$

for the $m$-vector $x_B$. Putting $x = (x_B, 0)$ gives a solution to $Ax = b$.

- We refer to $B$ as a basis, since $B$ consists of $m$ linearly independent columns that can be regarded as a basis for $E^m$.

Definition

Given the set of $m$ simultaneous linear equations in $n$ unknowns Equation 2, let $B$ be any nonsingular $m \times m$ submatrix made up of columns of $A$. Then, if all $n - m$ components of $x$ not associated with columns of $B$ are set equal to zero, the solution to the resulting set of equations is said to be a basic solution to Equation 2 with respect to basis $B$.

Definition

The components of $x$ associated with the columns of $B$, denoted by the subvector $x_B$ according to the same column index order in $B$, are called basic variables.

Full-Rank Assumption

The $m \times n$ matrix $A$ has $m < n$, and the $m$ rows of $A$ are linearly independent.

Basic Feasible Solutions

Definition

If one or more of the basic variables in a basic solution has value zero, that solution is said to be a degenerate basic solution.

- In a nondegenerate basic solution, the basic variables, and hence the basis $B$, can be immediately identified from the positive components of the solution.

- There is ambiguity associated with a degenerate basic solution: the zero-valued basic variables and some of the nonbasic variables can be interchanged!

Definition

A vector $x$ satisfying

$$Ax = b, \quad x \ge 0 \tag{3}$$

is said to be feasible for these constraints. A feasible solution to the constraints Equation 3 that is also basic is said to be a basic feasible solution; if this solution is also a degenerate basic solution, it is called a degenerate basic feasible solution.
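The definitions above translate directly into an enumeration: pick $m$ columns, solve $B x_B = b$ if $B$ is nonsingular, and pad with zeros. The sketch below does this with exact rational arithmetic. The helper names `solve` and `basic_solutions` and the small system ($x_1 + x_2 + x_3 = 1$, $2x_1 + 3x_2 = 1$) are assumptions chosen for illustration.

```python
from fractions import Fraction
from itertools import combinations


def solve(M, rhs):
    """Solve the square system M z = rhs exactly (Gauss-Jordan); None if singular."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(M, rhs)]
    for col in range(n):
        piv = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if piv is None:
            return None
        aug[col], aug[piv] = aug[piv], aug[col]
        aug[col] = [v / aug[col][col] for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [a - f * p for a, p in zip(aug[r], aug[col])]
    return [aug[r][n] for r in range(n)]


def basic_solutions(A, b):
    """Return (basis columns, x) for every nonsingular choice of m columns of A."""
    m, n = len(A), len(A[0])
    out = []
    for cols in combinations(range(n), m):
        x_B = solve([[A[i][j] for j in cols] for i in range(m)], b)
        if x_B is None:
            continue
        x = [Fraction(0)] * n
        for j, v in zip(cols, x_B):
            x[j] = v
        out.append((cols, x))
    return out


# Hypothetical system: x1 + x2 + x3 = 1, 2 x1 + 3 x2 = 1.
sols = basic_solutions([[1, 1, 1], [2, 3, 0]], [1, 1])
feasible = [x for _, x in sols if min(x) >= 0]
```

Filtering on nonnegativity separates the basic feasible solutions from basic solutions that violate $x \ge 0$; for this system one of the three basic solutions has a negative component.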

The Fundamental Theorem of Linear Programming

The Fundamental Theorem of LP

Corresponding to a linear program in standard form

$$
\begin{aligned}
\text{minimize} \quad & c^T x \\
\text{subject to} \quad & Ax = b, \quad x \ge 0,
\end{aligned}
\tag{4}
$$

a feasible solution to the constraints that achieves the minimum value of the objective function subject to those constraints is said to be an optimal feasible solution. If this solution is basic, it is an optimal basic feasible solution.

Fundamental Theorem

Given a linear program in standard form Equation 4 where $A$ is an $m \times n$ matrix of rank $m$,

- if there is a feasible solution, there is a basic feasible solution;

- if there is an optimal feasible solution, there is an optimal basic feasible solution.

Proof (1)

Denote the columns of $A$ by $a_1, a_2, \dots, a_n$. Suppose $x = (x_1, x_2, \dots, x_n)$ is a feasible solution. Then, in terms of the columns of $A$, this solution satisfies

$$x_1 a_1 + x_2 a_2 + \cdots + x_n a_n = b.$$

Assume that exactly $p$ of the variables $x_i$ are greater than zero, and wlog, that they are the first $p$ variables. Thus

$$x_1 a_1 + x_2 a_2 + \cdots + x_p a_p = b. \tag{5}$$

There are now two cases, corresponding to whether the set $a_1, a_2, \dots, a_p$ is linearly independent or linearly dependent.

Case 1: Assume $a_1, a_2, \dots, a_p$ are linearly independent. Then clearly, $p \le m$. If $p = m$, the solution is basic and the proof is complete. If $p < m$, then, since $A$ has rank $m$, $m - p$ vectors can be found from the remaining $n - p$ vectors so that the resulting set of $m$ vectors is linearly independent. Assigning the value zero to the corresponding $m - p$ variables yields a (degenerate) basic feasible solution.

Case 2: Assume $a_1, a_2, \dots, a_p$ are linearly dependent. Then there is a nontrivial linear combination of these vectors that is zero. Thus there are constants $y_1, y_2, \dots, y_p$, at least one of which can be assumed to be positive, such that

$$y_1 a_1 + y_2 a_2 + \cdots + y_p a_p = 0. \tag{6}$$

Multiplying this equation by a scalar $\varepsilon$ and subtracting it from Equation 5, we obtain

$$(x_1 - \varepsilon y_1) a_1 + (x_2 - \varepsilon y_2) a_2 + \cdots + (x_p - \varepsilon y_p) a_p = b. \tag{7}$$

This equation holds for every $\varepsilon$, and for each $\varepsilon$ the components $x_i - \varepsilon y_i$ correspond to a solution of the linear equalities, although they may violate $x_i - \varepsilon y_i \ge 0$. Denoting $y = (y_1, y_2, \dots, y_p, 0, \dots, 0)$, we see that for any $\varepsilon$

$$x - \varepsilon y \tag{8}$$

is a solution to the equalities. For $\varepsilon = 0$, this reduces to the original feasible solution.

As $\varepsilon$ is increased from zero, the various components increase, decrease, or remain constant, depending upon whether the corresponding $y_i$ is negative, positive, or zero. Since we assume at least one $y_i$ is positive, at least one component will decrease as $\varepsilon$ is increased. We increase $\varepsilon$ to the first point where one or more components become zero. Specifically, we set

$$\varepsilon = \min \{ x_i / y_i : y_i > 0 \}. \tag{9}$$

For this value of $\varepsilon$, the solution given by Equation 8 is feasible and has at most $p - 1$ positive variables. Repeating this process as necessary, we can eliminate positive variables until we have a feasible solution with corresponding columns that are linearly independent. At that point Case 1 applies.

Proof (2)

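Let $x = (x_1, x_2, \dots, x_n)$ be an optimal feasible solution and, as in the proof of (1) above, suppose there are exactly $p$ positive variables $x_1, x_2, \dots, x_p$. Again, there are two cases; and Case 1, corresponding to linear independence, is exactly the same as before.

Case 2 also goes exactly the same as before, but it must be shown that for any $\varepsilon$ the solution Equation 8 is optimal. To show this, note that the value of the solution $x - \varepsilon y$ is

$$c^T x - \varepsilon\, c^T y. \tag{10}$$

For $\varepsilon$ sufficiently small in magnitude, $x - \varepsilon y$ is a feasible solution for positive or negative values of $\varepsilon$. Thus, we conclude that $c^T y = 0$. For, if $c^T y \ne 0$, an $\varepsilon$ of small magnitude and proper sign could be determined so as to render Equation 10 smaller than $c^T x$ while maintaining feasibility. This would contradict the optimality of $x$, so the solutions with fewer positive components produced in Case 2 are also optimal.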


Part (1) of the theorem is commonly referred to as Carathéodory’s theorem.

Part (2) of the theorem reduces the task of solving a linear program to that of searching over basic feasible solutions.

Since for a problem having $n$ variables and $m$ constraints there are at most

$$\binom{n}{m} = \frac{n!}{m!\,(n - m)!}$$

basic solutions (corresponding to the number of ways of selecting $m$ of $n$ columns), there are only a finite number of possibilities.

Thus the fundamental theorem yields an obvious, but terribly inefficient, finite search technique.

By expanding upon the technique of proof as well as the statement of the fundamental theorem, the efficient simplex procedure is derived.
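The "obvious, but terribly inefficient" finite search can be sketched as follows: enumerate every choice of $m$ columns, keep the basic solutions that are feasible, and return the one with the smallest cost. This is only an illustration under assumptions. The function names and the test instance (minimize $-2x_1 - x_2$ subject to $x_1 + \tfrac{8}{3}x_2 \le 4$, $x_1 + x_2 \le 2$, $2x_1 \le 3$, written with slack variables) are hypothetical, and the search presumes that an optimal solution exists, since it cannot detect an unbounded objective.

```python
from fractions import Fraction
from itertools import combinations
from math import comb


def solve(M, rhs):
    """Solve the square system M z = rhs exactly (Gauss-Jordan); None if singular."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(M, rhs)]
    for col in range(n):
        piv = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if piv is None:
            return None
        aug[col], aug[piv] = aug[piv], aug[col]
        aug[col] = [v / aug[col][col] for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [a - f * p for a, p in zip(aug[r], aug[col])]
    return [aug[r][n] for r in range(n)]


def best_bfs(A, b, c):
    """Return (z, x) for the cheapest basic feasible solution, or None if none exists."""
    m, n = len(A), len(A[0])
    best = None
    for cols in combinations(range(n), m):
        x_B = solve([[A[i][j] for j in cols] for i in range(m)], b)
        if x_B is None or min(x_B) < 0:
            continue  # singular basis, or basic but infeasible
        x = [Fraction(0)] * n
        for j, v in zip(cols, x_B):
            x[j] = v
        z = sum(cj * xj for cj, xj in zip(c, x))
        if best is None or z < best[0]:
            best = (z, x)
    return best


# Hypothetical instance in standard form (x3, x4, x5 are slack variables).
A = [[1, Fraction(8, 3), 1, 0, 0],
     [1, 1, 0, 1, 0],
     [2, 0, 0, 0, 1]]
b = [4, 2, 3]
c = [-2, -1, 0, 0, 0]
z_opt, x_opt = best_bfs(A, b, c)
n_candidates = comb(5, 3)  # number of column subsets examined
```

Even for this tiny problem ten column subsets are examined; the count $\binom{n}{m}$ grows very quickly with problem size, which is the motivation for the simplex procedure.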

Relations to Convex Geometry

Extreme Points

Definition

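A point $x$ in a convex set $C$ is said to be an extreme point of $C$ if there are no two distinct points $x_1$ and $x_2$ in $C$ such that $x = \alpha x_1 + (1 - \alpha) x_2$ for some $\alpha$, $0 < \alpha < 1$.

- An extreme point is thus a point that does not lie strictly within a line segment connecting two other points of the set.

Theorem (Equivalence of Extreme Points and Basic Solutions).

Let $A$ be an $m \times n$ matrix of rank $m$ and $b$ an $m$-vector. Let $K$ be the convex polytope consisting of all $n$-vectors $x$ satisfying

$$Ax = b, \quad x \ge 0. \tag{11}$$

A vector $x$ is an extreme point of $K$ if and only if $x$ is a basic feasible solution to Equation 11.

Proof

Suppose first that $x = (x_1, x_2, \dots, x_m, 0, \dots, 0)$ is a basic feasible solution to Equation 11. Then

$$x_1 a_1 + x_2 a_2 + \cdots + x_m a_m = b,$$

where $a_1, a_2, \dots, a_m$, the first $m$ columns of $A$, are linearly independent. Suppose that $x$ could be expressed as a convex combination of two other points in $K$; say, $x = \alpha y + (1 - \alpha) z$, $0 < \alpha < 1$, $y \ne z$.

Since all components of $x$, $y$, $z$ are nonnegative and since $0 < \alpha < 1$, it follows immediately that the last $n - m$ components of $y$ and $z$ are zero. Thus, in particular, we have

$$y_1 a_1 + y_2 a_2 + \cdots + y_m a_m = b$$

and

$$z_1 a_1 + z_2 a_2 + \cdots + z_m a_m = b.$$

Since the vectors $a_1, a_2, \dots, a_m$ are linearly independent, however, it follows that $x = y = z$ and hence $x$ is an extreme point of $K$.

Conversely, assume that $x$ is an extreme point of $K$. Let us assume that the nonzero components of $x$ are the first $k$ components. Then

$$x_1 a_1 + x_2 a_2 + \cdots + x_k a_k = b,$$

with $x_i > 0$, $i = 1, 2, \dots, k$.

To show that $x$ is a basic feasible solution (BFS) it must be shown that the vectors $a_1, a_2, \dots, a_k$ are linearly independent. We do this by contradiction. Suppose they are linearly dependent. Then there is a nontrivial linear combination that is zero:

$$y_1 a_1 + y_2 a_2 + \cdots + y_k a_k = 0.$$

Define the $n$-vector $y = (y_1, y_2, \dots, y_k, 0, \dots, 0)$. Since $x_i > 0$, $1 \le i \le k$, it is possible to select $\varepsilon > 0$ such that

$$x + \varepsilon y \ge 0, \quad x - \varepsilon y \ge 0.$$

We then have $x = \tfrac{1}{2}(x + \varepsilon y) + \tfrac{1}{2}(x - \varepsilon y)$, which expresses $x$ as a convex combination of two distinct vectors in $K$. This contradicts the assumption that $x$ is an extreme point, so $a_1, a_2, \dots, a_k$ are linearly independent and $x$ is a basic feasible solution.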

Extreme Points

Corollary

If the convex set $K$ corresponding to Equation 11 is nonempty, it has at least one extreme point.

Corollary

If there is a finite optimal solution to a linear programming problem, there is a finite optimal solution which is an extreme point of the constraint set.

Corollary

The constraint set $K$ corresponding to Equation 11 possesses at most a finite number of extreme points and each of them is finite.

Corollary

If the convex polytope $K$ corresponding to Equation 11 is bounded, then $K$ is a convex polyhedron, that is, $K$ consists of points that are convex combinations of a finite number of points.

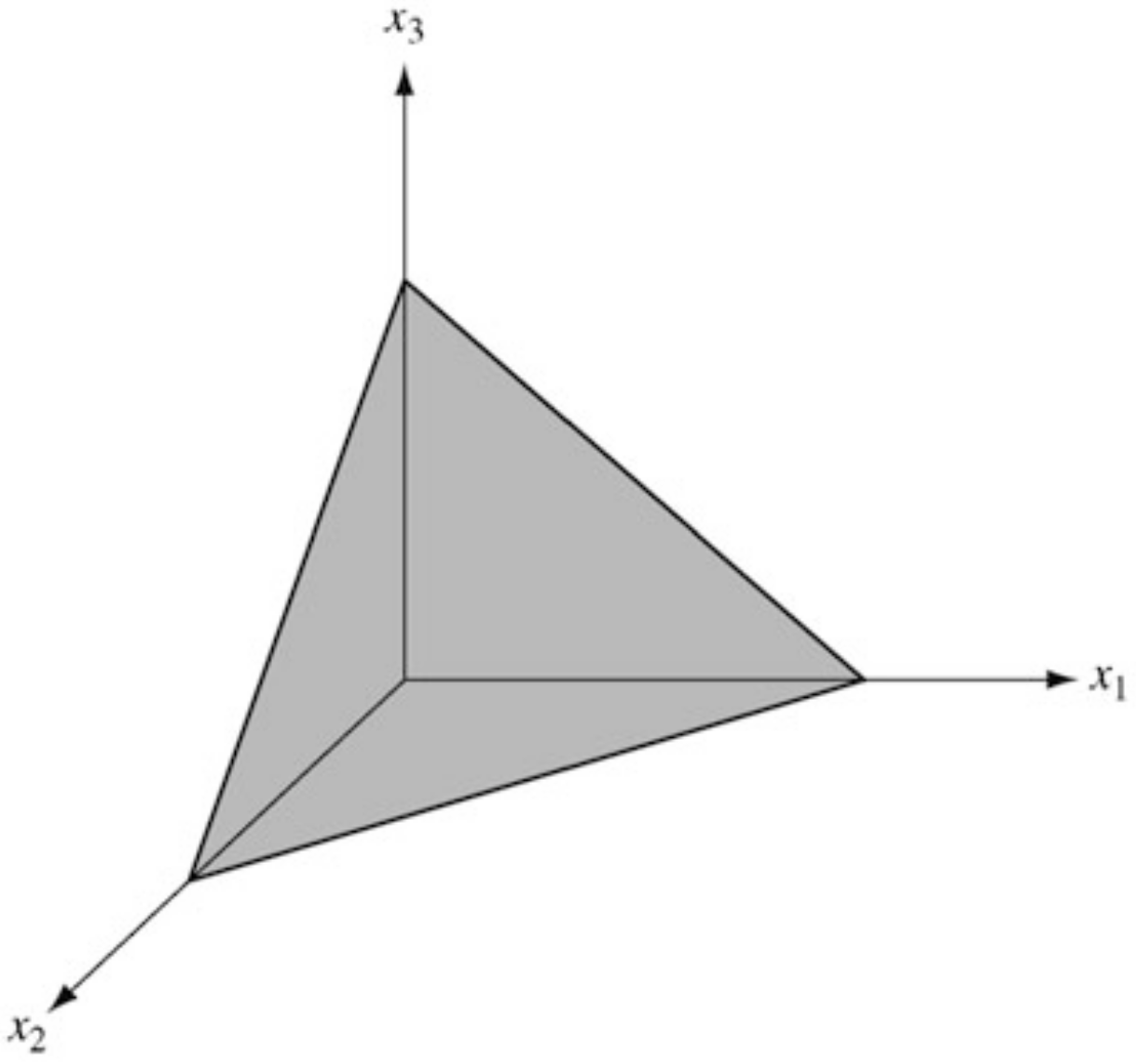

Convex Geometry – Examples

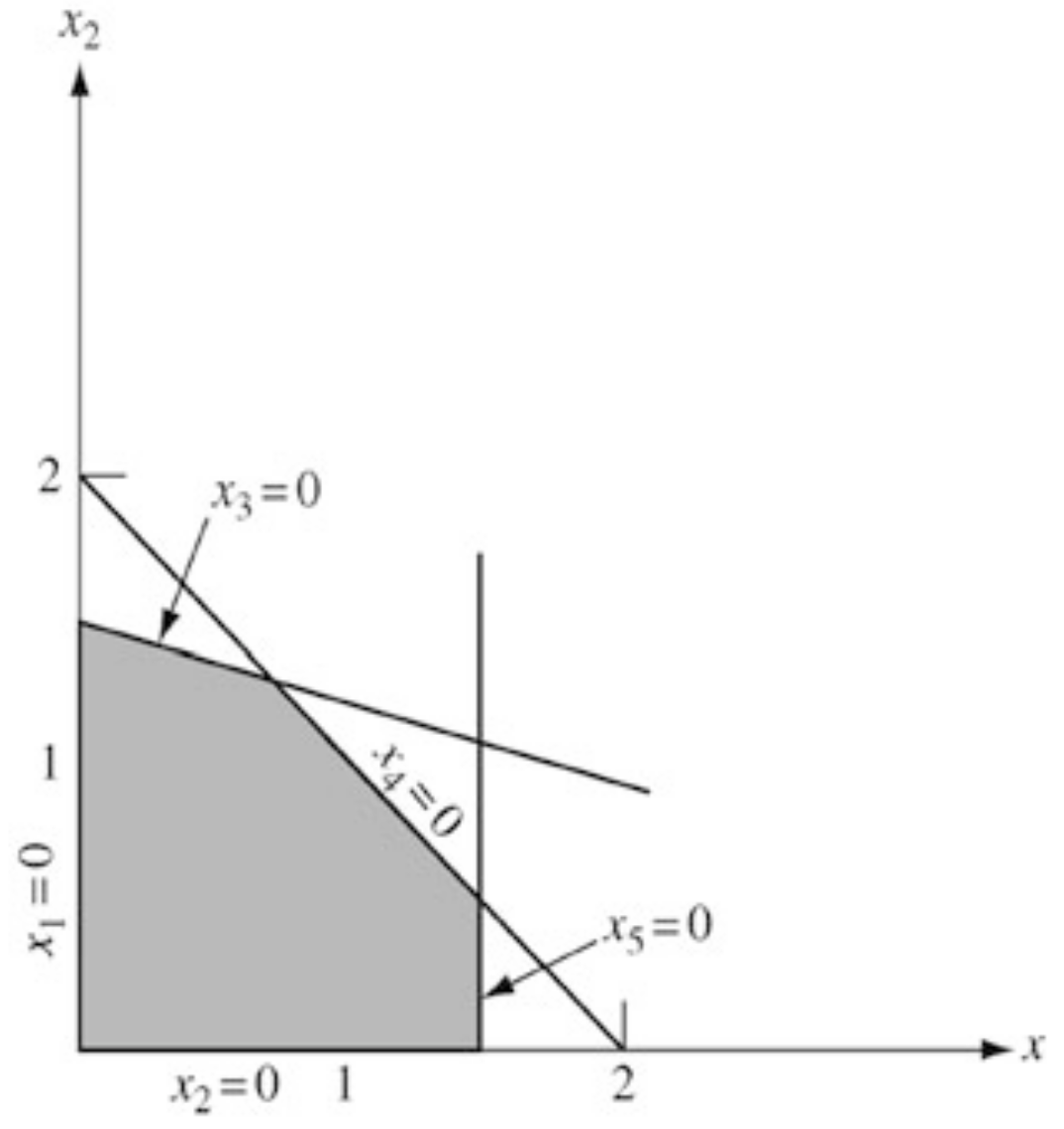

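Consider the constraint set in $E^3$ defined by

$$x_1 + x_2 + x_3 = 1, \quad x_1 \ge 0, \quad x_2 \ge 0, \quad x_3 \ge 0.$$

- This set is illustrated in the figure.

- It has three extreme points, corresponding to the three basic solutions to $x_1 + x_2 + x_3 = 1$.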

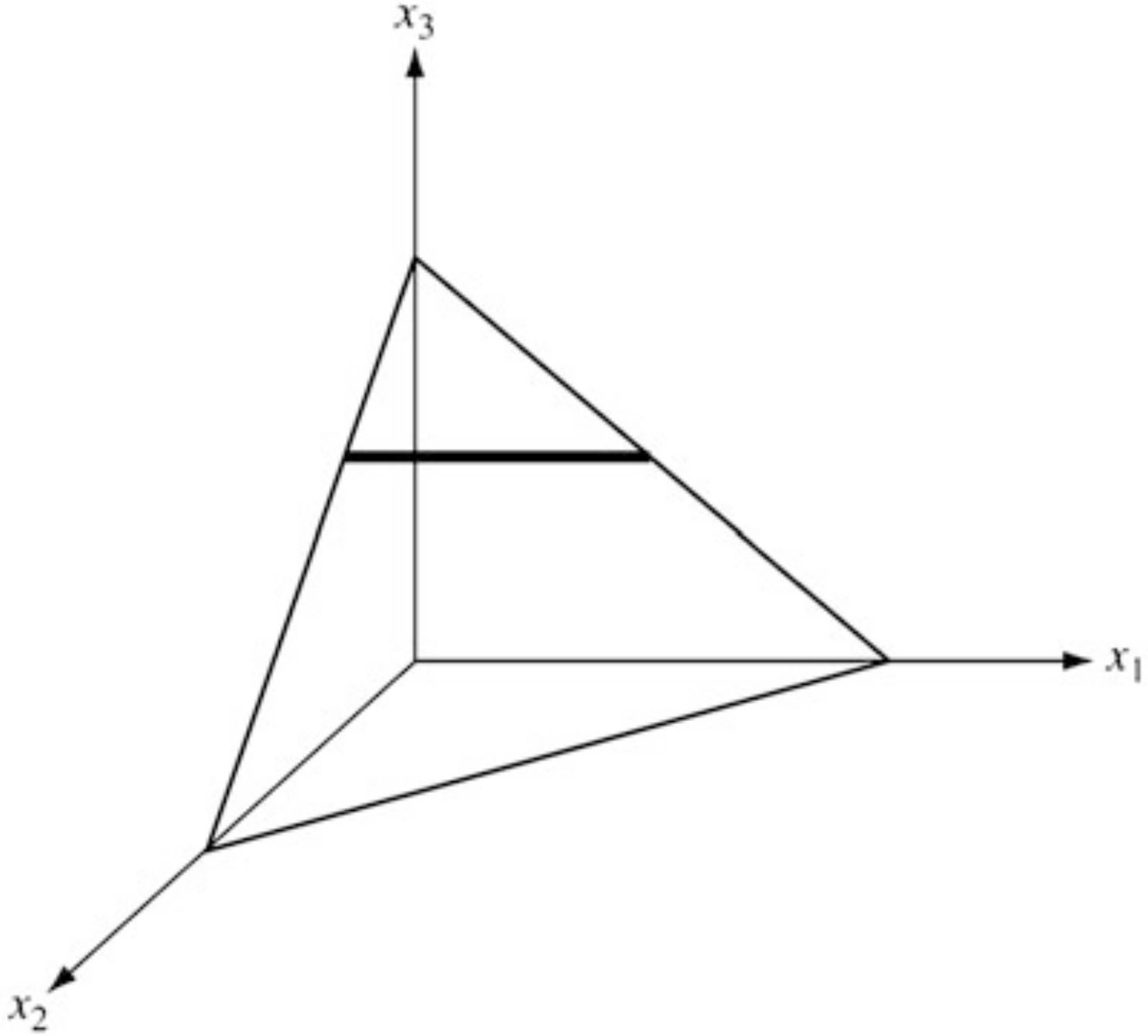

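Consider the constraint set in $E^3$ defined by

$$x_1 + x_2 + x_3 = 1, \quad 2x_1 + 3x_2 = 1, \quad x_1 \ge 0, \quad x_2 \ge 0, \quad x_3 \ge 0.$$

- This set is illustrated in the figure.

- It has two extreme points, corresponding to the two basic feasible solutions.

- Note that the system of equations itself has three basic solutions, $(2, -1, 0)$, $(1/2, 0, 1/2)$, $(0, 1/3, 2/3)$, the first of which is not feasible.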

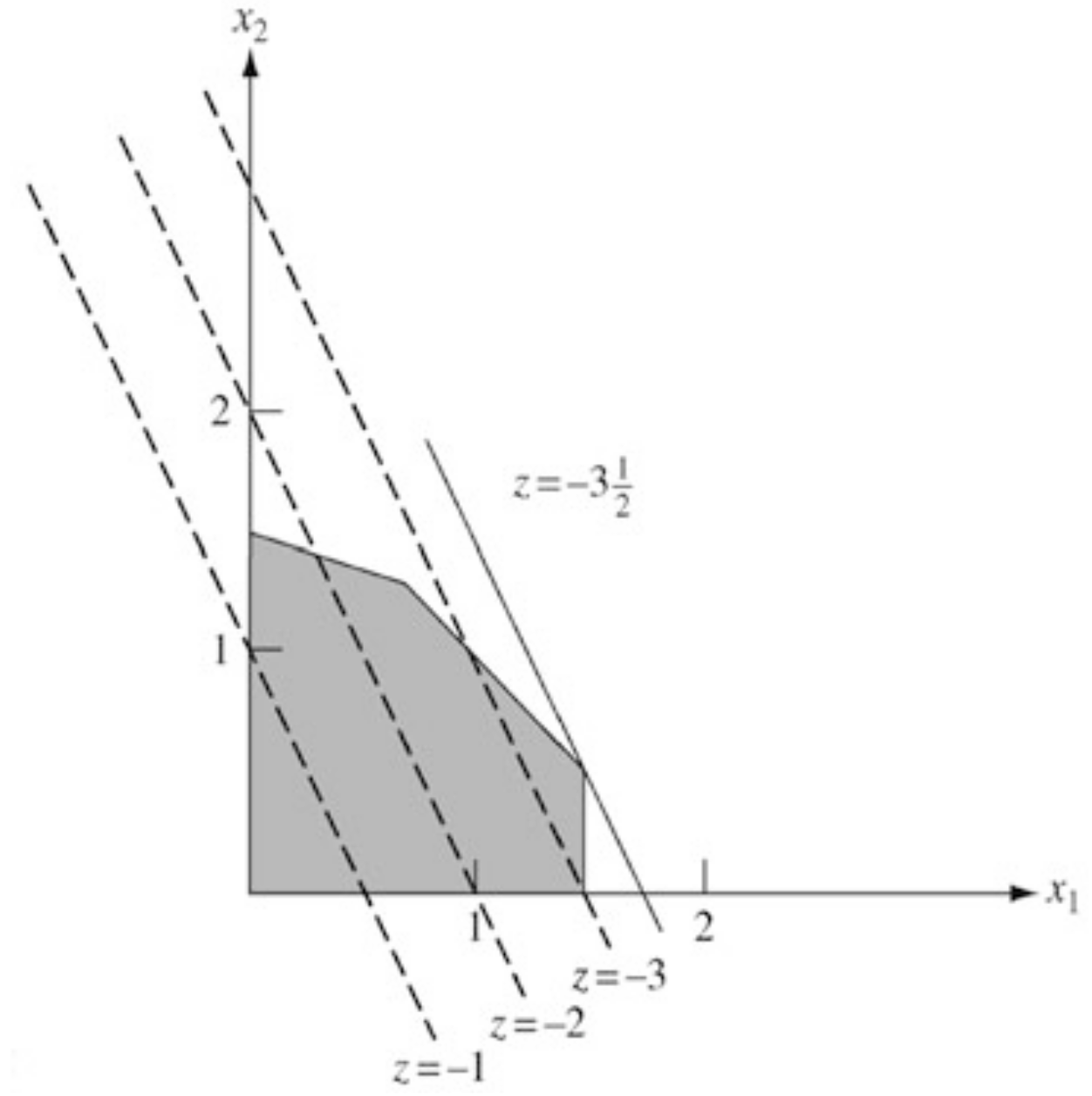

Convex Geometry – Examples

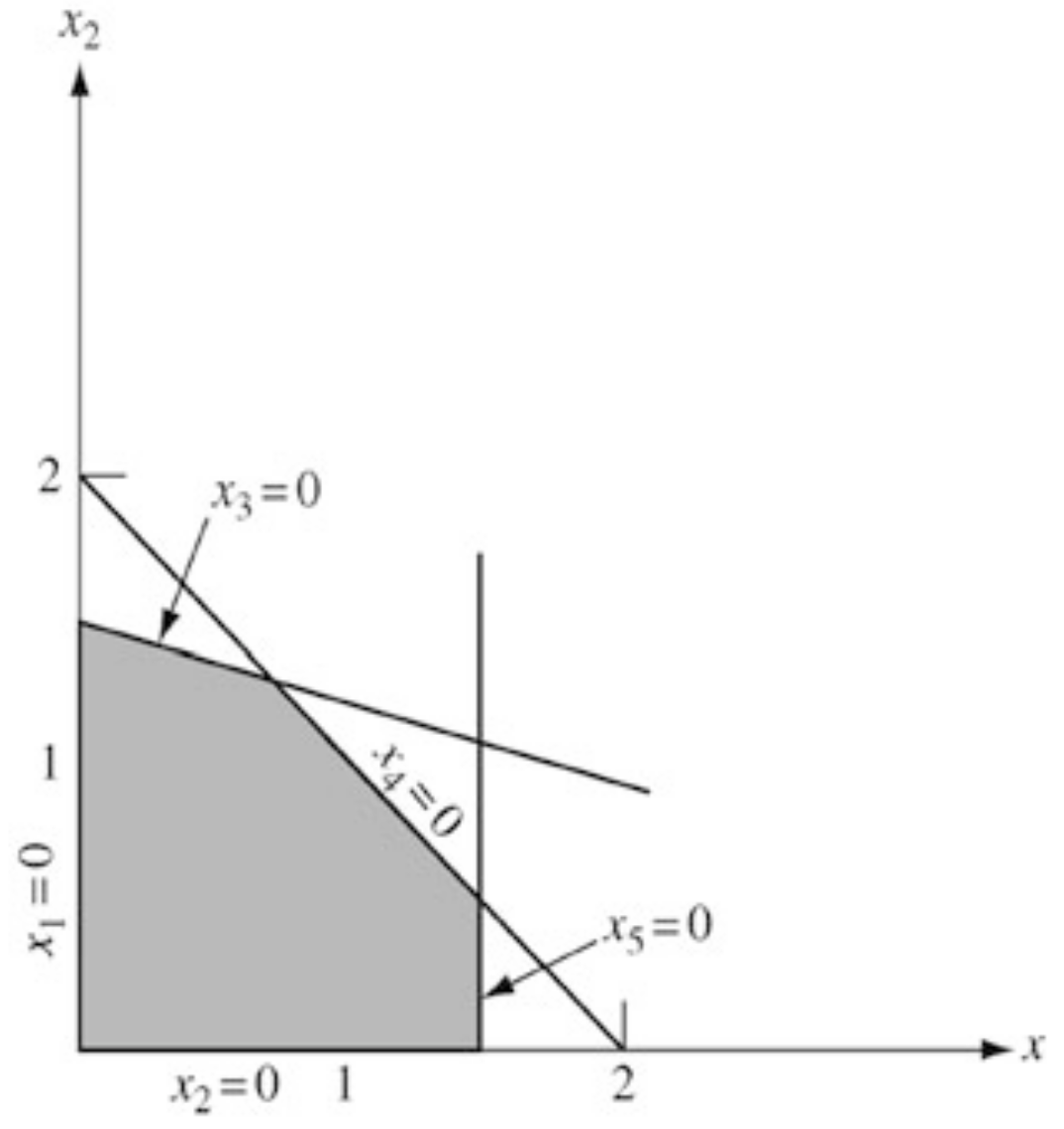

Consider the constraint set in defined by

- The set has five extreme points.

- In order to compare this example, we must introduce slack variables to yield the equivalent set in .

- A basic solution for this system is obtained by setting any two variables to zero and solving for the remaining three.

A basic solution for this system is obtained by setting any two variables to zero and solving for the remaining three.

As indicated in the figure, each edge of the figure corresponds to one variable being zero, and the extreme points are the points where two variables are zero.

Even when not expressed in the standard form, the extreme points of the set defined by the constraints of a lienar program correspond to the possible solution points.

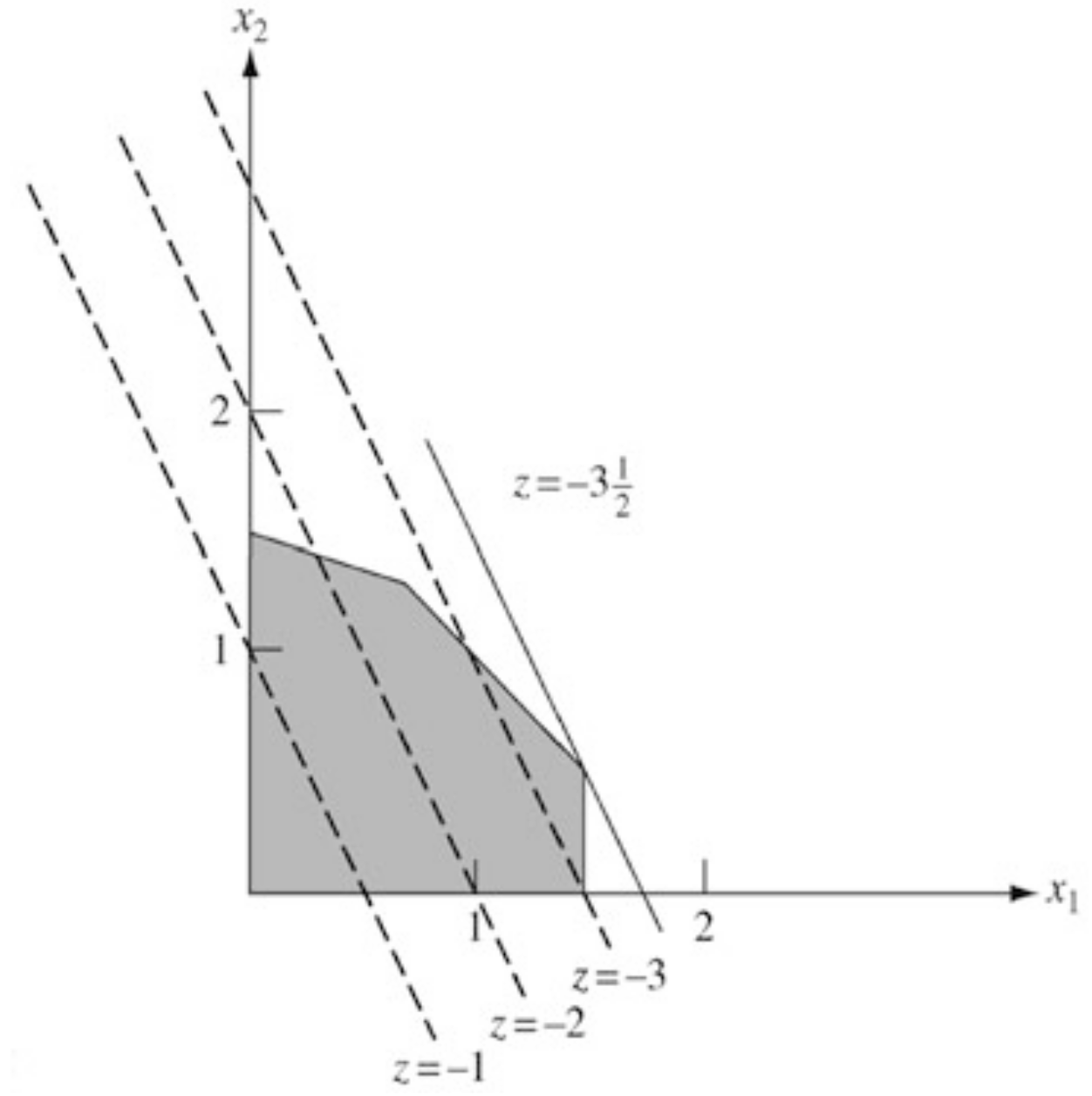

The level sets of an objective function is included in the bottom figure.

- As the level varies, different parallel lines are obtained.

- The optimal value of the linear program is the smallest value of this level for which the corresponding line has a point in common with the feasible set.

- In the figure, this occurs at the point with the level .

Farkas’s Lemma and Alternative Systems

(In)feasibility Certificates

Theorem (Farkas’s Lemma).

Let $A$ be an $m \times n$ matrix and $b$ be an $m$-vector. The system of constraints

$$Ax = b, \quad x \ge 0 \tag{12}$$

has a feasible solution $x$ if and only if the system of constraints

$$-y^T A \ge 0, \quad y^T b = 1 \ (\text{or } y^T b > 0) \tag{13}$$

has no feasible solution $y$. Therefore a single feasible solution $y$ for system Equation 13 establishes an infeasibility certificate for the system Equation 12.

- The two systems, Equation 12 and Equation 13, are called alternative systems: exactly one of them is feasible and the other is infeasible.

Example 1

Suppose $A = (1, 1)$ and $b = -1$. Then, $y = -1$ is feasible for system Equation 13, which proves that the system Equation 12 is infeasible.
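Checking a proposed certificate is a purely mechanical computation. The following sketch tests whether a given $y$ satisfies the alternative system in the form $y^T A \le 0$, $y^T b > 0$ (any such $y$ can be rescaled so that $y^T b = 1$). The function name `is_infeasibility_certificate`, the tolerance, and the one-row data are assumptions for illustration.

```python
def is_infeasibility_certificate(A, b, y, tol=1e-12):
    """True if y^T A <= 0 componentwise and y^T b > 0.

    Such a y proves that {x : A x = b, x >= 0} is empty.
    """
    m, n = len(A), len(A[0])
    yTA = [sum(y[i] * A[i][j] for i in range(m)) for j in range(n)]
    yTb = sum(y[i] * b[i] for i in range(m))
    return all(v <= tol for v in yTA) and yTb > tol


# Hypothetical data: the single equation x1 + x2 = -1 with x >= 0 has no solution.
A = [[1, 1]]
b = [-1]
certified = is_infeasibility_certificate(A, b, [-1])
not_certified = is_infeasibility_certificate(A, b, [1])
```

A `False` result for one particular $y$ says nothing about feasibility; only the existence of some valid certificate settles the question.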

(In)feasibility Certificates

Lemma

Let $C$ be the cone generated by the columns of matrix $A$, that is, $C = \{Ax \in E^m : x \ge 0\}$. Then $C$ is a closed and convex set.

Proof (of Farkas’s Lemma).

Let the system Equation 12 have a feasible solution, say $\bar{x}$. Then, the system Equation 13 must be infeasible, since, otherwise, we have a contradiction

$$0 < y^T b = y^T (A \bar{x}) = (y^T A) \bar{x} \le 0$$

from $\bar{x} \ge 0$ and $y^T A \le 0$.

Now, let the system Equation 12 have no feasible solution, that is, $b \notin C$. We now prove that its alternative system Equation 13 must have a feasible solution.

Since the point $b$ is not in $C$ and $C$ is a closed convex set, by the separating hyperplane theorem, there is a $y$ such that

$$y^T b > \sup_{c \in C} y^T c.$$

But we know that $c = Ax$ for some $x \ge 0$, so we have

$$y^T b > \sup_{x \ge 0} y^T A x. \tag{14}$$

Setting $x = 0$, we have $y^T b > 0$ from inequality Equation 14.

Furthermore, inequality Equation 14 also implies $y^T A \le 0$. For otherwise, say the first entry of $y^T A$, $(y^T A)_1$, is positive. We can then choose a vector $\bar{x} \ge 0$ such that

$$\bar{x}_1 = \alpha > 0, \quad \bar{x}_2 = \cdots = \bar{x}_n = 0.$$

Then, from this choice, we have

$$\sup_{x \ge 0} y^T A x \ge y^T A \bar{x} = (y^T A)_1 \cdot \alpha.$$

This tends to $\infty$ as $\alpha \to \infty$. This is a contradiction because $\sup_{x \ge 0} y^T A x$ should be bounded from above by inequality Equation 14. Therefore, $y$ identified in the separating hyperplane theorem is a feasible solution to system Equation 13. Finally, we can always scale $y$ such that $y^T b = 1$.

Geometric Interpretation

If $b$ is not in the closed and convex cone generated by the columns of the matrix $A$, then there must be a hyperplane separating $b$ and the cone, and the feasible solution $y$ to the alternative system is the slope-vector of the hyperplane.

Variant of Farkas’s Lemma

Corollary

Let $A$ be an $m \times n$ matrix and $c$ an $n$-vector. The system of constraints

$$A^T y \le c \tag{15}$$

has a feasible solution $y$ if and only if the system of constraints

$$Ax = 0, \quad x \ge 0, \quad c^T x = -1 \ (\text{or } c^T x < 0) \tag{16}$$

has no feasible solution $x$. Therefore a single feasible solution $x$ for system Equation 16 establishes an infeasibility certificate for the system Equation 15.

Adjacent Basic Feasible Solutions (Extreme Points)

Adjacent Solutions

We know that it is only necessary to consider basic feasible solutions to the system

$$Ax = b, \quad x \ge 0 \tag{17}$$

when solving a linear program.

The idea of the simplex method is to move from a basic feasible solution (extreme point) to an adjacent one with an improved objective value.

Definition

Two basic feasible solutions are said to be adjacent if and only if they differ by one basic variable.

- A new basic solution can be generated from an old one by replacing one current basic variable by a current nonbasic one.

- It is impossible to arbitrarily specify a pair of variables whose roles are to be interchanged and expect to maintain the nonnegativity condition.

- However, it is possible to arbitrarily specify which current nonbasic (entering or incoming) variable is to become basic and then determine which current basic (leaving or outgoing) variable should become nonbasic.

Determination of a Vector to Leave the Basis

- Let the BFS be partitioned as $x_B = B^{-1} b$ and $x_D = 0$.

- $b = B x_B$ is a linear combination of columns of $B$ with the positive multipliers $x_B$.

- Suppose we decided to bring into representation the (entering) column vector $a_e$ of $A$ ($e > m$), while keeping all others nonbasic.

- A new representation of $b$ as a linear combination of $m + 1$ vectors ($a_e$ added to the current basis $B$) for any nonnegative multiplier $\varepsilon$ and $\bar{a}_e = B^{-1} a_e$:

$$b = B (x_B - \varepsilon \bar{a}_e) + \varepsilon a_e. \tag{19}$$

- Since $x_e$ is the incoming variable, its value needs to be increased from the current $0$ to a positive value, say $\varepsilon$.

- As the value of $x_e$ increases, the current basic variable vector $x_B$ needs to be adjusted accordingly to keep feasibility.

- For $\varepsilon = 0$, we have the old basic feasible solution $x_B > 0$.

- The values of $(x_B)_i - \varepsilon \bar{a}_{ie}$ will either increase or remain unchanged if $\bar{a}_{ie} \le 0$; or decrease linearly as $\varepsilon$ is increased if $\bar{a}_{ie} > 0$.

- For small enough $\varepsilon$, Equation 19 gives a feasible but nonbasic solution.

- If any decrease, we may set $\varepsilon$ equal to the value corresponding to the first place where one (or more) of the values vanishes:

$$\varepsilon = \min_i \left\{ \frac{(x_B)_i}{\bar{a}_{ie}} : \bar{a}_{ie} > 0 \right\}. \tag{21}$$

- We have a new BFS, with the vector $a_e$ replacing the (outgoing) column $a_o$, where index $o$ corresponds to the minimizing ratio in Equation 21.

If none of the $\bar{a}_{ie}$'s are positive, then all coefficients in Equation 19 increase (or remain constant) as $\varepsilon$ is increased, and no new basic feasible solution is obtained.

In this case the set of feasible solutions to Equation 17 is unbounded.
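The minimum-ratio rule that selects the leaving column can be sketched as a small function. The name `ratio_test` and the sample vectors are assumptions for illustration; the inputs are the current basic values $x_B$ and the entering column expressed in the current basis, $\bar{a}_e = B^{-1} a_e$.

```python
from math import inf


def ratio_test(x_B, abar_e):
    """Return (eps, o): the largest feasible step and the leaving position.

    Only positive entries of abar_e limit the step. If there are none,
    the step is unbounded and (inf, None) is returned.
    """
    eps, o = inf, None
    for i, (xi, ai) in enumerate(zip(x_B, abar_e)):
        if ai > 0 and xi / ai < eps:
            eps, o = xi / ai, i
    return eps, o


# Hypothetical data: the third basic variable reaches zero first.
step, leaving = ratio_test([4, 2, 3], [1, 1, 2])
unbounded = ratio_test([1, 2], [-1, 0])
```

Ties are broken here in favor of the smallest index; at a tie the new basic feasible solution is degenerate.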

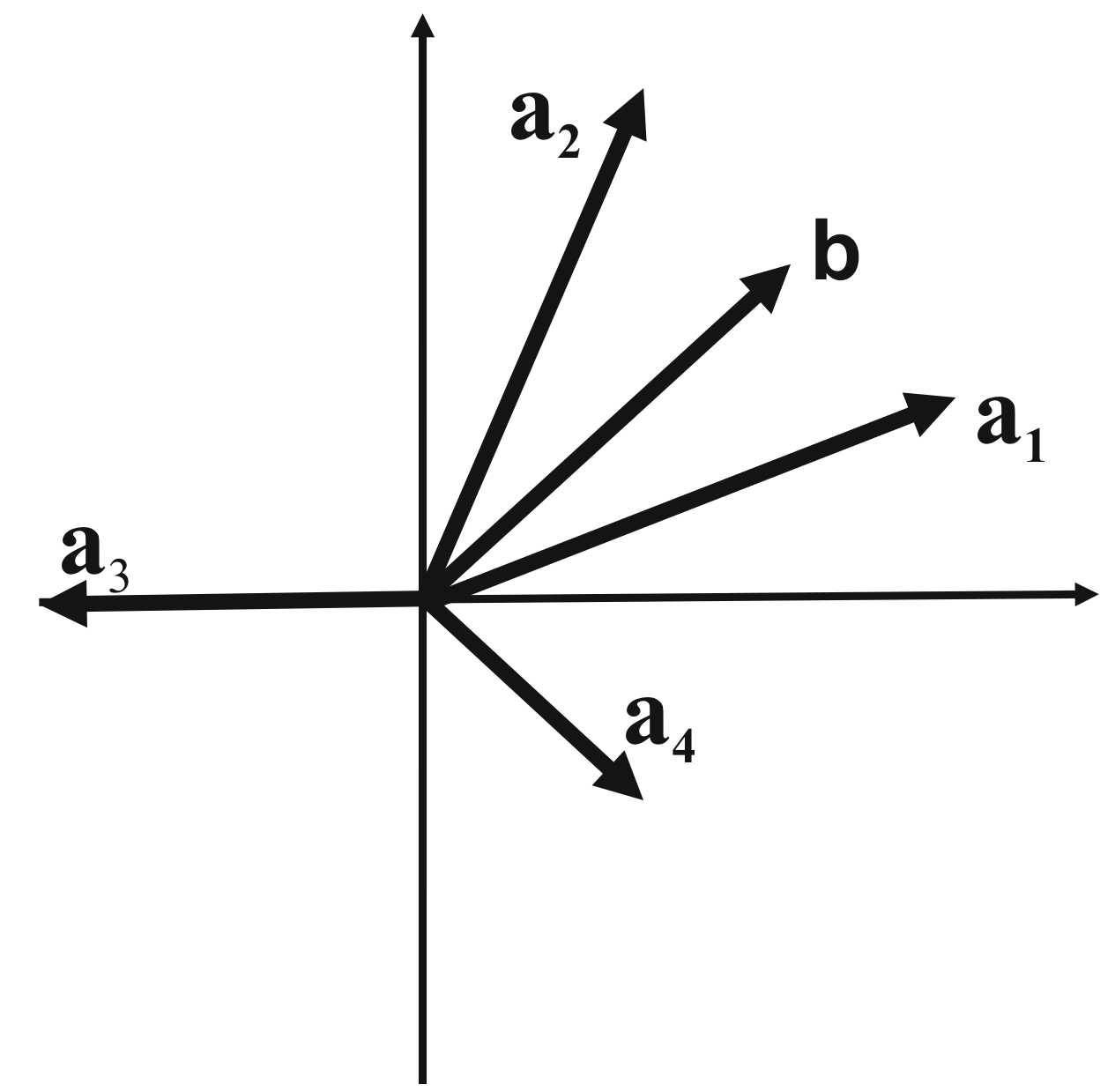

Conic Combination Interpretations

- The basis transformation can be represented in the requirements space, the space where the columns of $A$ and $b$ are represented.

- A feasible solution defines a representation of $b$ as a conic combination of the $a_j$'s.

- A BFS will only use $m$ positive weights.

- Suppose we start with and as the initial basis.

- Then an adjacent basis is found by bringing in some other vector.

- If is brought in, then clearly must go out (why?).

- On the other hand, if is brought in, must go out (why?).

- A BFS can be constructed with positive weights on and because lies between them.

- A BFS cannot be constructed with positive weights on and .

- Another interpretation is in the activity space, the space where $x$ lives.

- Here, the feasible region is shown directly as a convex set, and BFS are extreme points.

- Adjacent extreme points are points that lie on a common edge.

Conic Combination Interpretations – Example

Example (Basis Change Illustration)

- Suppose we start with and as the initial basis and select as the incoming column. Then

From Equation 21, and is the outgoing column so that the new basis is formed by and .

Now, suppose we start with and as the initial basis and select as the incoming column. Then

- Since the entries of the incoming column are all negative, in Equation 21 can go to , indicating that the feasible region is unbounded.

The Primal Simplex Method

Determining an Optimal Feasible Solution

The idea of the simplex method is to select the incoming column so that the resulting new BFS will yield a lower value to the objective function than the previous one.

- Assume that $B$ consists of the first $m$ columns of $A$. Then partition $A$, $x$ and $c^T$ as

$$A = [B, D], \quad x = (x_B, x_D), \quad c^T = [c_B^T, c_D^T].$$

Suppose we have a basic feasible solution

$$x = (x_B, 0), \quad x_B = B^{-1} b \ge 0.$$

The value of the objective function is

$$z = c^T x = c_B^T x_B + c_D^T x_D. \tag{22}$$

Hence for the current basic solution, the corresponding value is

$$z_0 = c_B^T B^{-1} b. \tag{23}$$

- For any value of $x_D$ the necessary value of $x_B$ is determined from the $m$ equality constraints of the linear program, i.e., from $B x_B + D x_D = b$:

$$x_B = B^{-1} b - B^{-1} D x_D. \tag{24}$$

- When this expression is substituted into Equation 22 we obtain

$$z = c_B^T (B^{-1} b - B^{-1} D x_D) + c_D^T x_D = z_0 + (c_D^T - y^T D) x_D. \tag{25}$$

Equation 25 expresses the cost of any feasible solution to Equation 17 in terms of the independent variables in $x_D$.

Here, $y^T = c_B^T B^{-1}$ is the vector of simplex multipliers or shadow prices corresponding to basis $B$.

- Let

$$r_D^T = c_D^T - y^T D. \tag{26}$$

- Then from formula Equation 25,

$$z = z_0 + r_D^T x_D. \tag{27}$$

- The vector $r_D$ represents the relative cost vector, also called reduced cost or reduced gradient vector, for the nonbasic variables in $x_D$.

- From formula Equation 27, we can now determine if there is any advantage in changing the basic solution by introducing one of the nonbasic variables.

- If $r_j$ is negative for some $j$, $m + 1 \le j \le n$, then increasing $x_j$ from zero to some positive value would decrease the total cost, yielding a better solution.

- Equation 27 automatically takes into account the changes that would be required in the values of the basic variables $x_B$ to accommodate the change in $x_j$.

Determining an Optimal Feasible Solution

Theorem (Improvement of Basic Feasible Solution)

Given a nondegenerate BFS with corresponding objective value $z_0$, suppose that for some $j$ there holds $r_j < 0$. Then there is a feasible solution with objective value $z < z_0$. If the column $a_j$ can be substituted for some vector in the original basis to yield a new basic feasible solution, this new solution will have $z < z_0$. If $a_j$ cannot be substituted to yield a BFS then the solution set is unbounded and the objective function can be made arbitrarily small (toward minus infinity).

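- Final question: Does $r_D \ge 0$ imply optimality?

- The answer is “yes” due to strong duality and the fact that

$$r = c - A^T y \ge 0, \quad \text{with } r_B = c_B - B^T y = 0.$$

This means that $y$ is feasible for the dual and

$$c^T x = c_B^T x_B = y^T B x_B = y^T b,$$

and strong duality forces that $x$ is optimal.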

Optimality Condition Theorem

If $r_D \ge 0$ for some basic feasible solution $x$, then that solution is optimal.

Economic Interpretations – Diet Problem

Optimality

- We consider a certain food not in the basis – say carrots – and determine if it would be advantageous to bring it into the basis.

- This is easily determined by examining the cost of carrots as compared with the cost of synthetic carrots.

- Say carrots are food $j$, whose unit cost is $c_j$. The cost of a unit of synthetic carrots is

$$z_j = y^T a_j.$$

- If the reduced coefficient $r_j = c_j - z_j < 0$, it is advantageous to use real carrots in place of synthetic carrots, and carrots should be brought into the basis.

- In general, each $z_j$ can be thought of as the price of a unit of the column $a_j$ when constructed from the current basis.

- The difference between this synthetic price and the direct price of that column determines whether that column should enter the basis.

Diet Problem (Exact nutritional requirements)

- $a_j$ gives the nutritional equivalent of a unit of a particular food $j$.

- Given a basis $B$, say the first $m$ columns of $A$, the corresponding $\bar{a}_j = B^{-1} a_j$ shows how the nutritional contents of any food $j$ can be constructed as a combination of the foods in the basis.

- For instance, if carrots are not in the basis we can, using the description given by the tableau, construct a synthetic carrot, which is nutritionally equivalent to a carrot, by an appropriate combination of the foods in the basis.

The Simplex Procedure

Step 0. Given the inverse $B^{-1}$ of a current basis, and the current solution $x_B = B^{-1} b$.

Step 1. Calculate the current simplex multiplier vector $y^T = c_B^T B^{-1}$ and then calculate the relative cost coefficients $r_D^T = c_D^T - y^T D$. If $r_D \ge 0$, stop; the current solution is optimal.

Step 2. Determine the vector $a_e$ that is to enter the basis by selecting its most negative cost coefficient, the $e$th ($e$ for entering) coefficient (break ties arbitrarily); and calculate $\bar{a}_e = B^{-1} a_e$.

Step 3. If $\bar{a}_e \le 0$, stop; the problem is unbounded. Otherwise, calculate the ratios $(x_B)_i / \bar{a}_{ie}$ for $\bar{a}_{ie} > 0$ to determine the current basic column $a_o$ that is to leave the basis, where $o$ corresponds to the index of the minimum ratio.

Step 4. Update $B^{-1}$ (or its factorization) and the new basic feasible solution $x_B = B^{-1} b$. Return to Step 1.

Remark

The basic columns in and the nonbasic columns in can be ordered arbitrarily and the components in follow the same index orders.

More precisely, let columns be permuted as and . Then when is identified as the most negative coefficient in in Step 2, is the entering column. Similarly, when is identified as the minimum ratio index in Step 3, is the outgoing column.
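Steps 0 to 4 can be sketched in plain Python with exact rational arithmetic. This is a minimal illustration under stated assumptions rather than an efficient implementation: instead of updating $B^{-1}$ in Step 4 it re-solves the small linear systems with the current basis at every iteration, it has no anti-cycling safeguard, and the names `solve` and `simplex` as well as the test instance are hypothetical.

```python
from fractions import Fraction


def solve(M, rhs):
    """Solve the square system M z = rhs exactly (Gauss-Jordan); None if singular."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(M, rhs)]
    for col in range(n):
        piv = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if piv is None:
            return None
        aug[col], aug[piv] = aug[piv], aug[col]
        aug[col] = [v / aug[col][col] for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [a - f * p for a, p in zip(aug[r], aug[col])]
    return [aug[r][n] for r in range(n)]


def simplex(A, b, c, basis):
    """Minimize c.x subject to A x = b, x >= 0, starting from a feasible basis."""
    m, n = len(A), len(A[0])
    basis = list(basis)
    while True:
        B = [[A[i][j] for j in basis] for i in range(m)]
        x_B = solve(B, b)                                   # Step 0: x_B = B^-1 b
        B_T = [[B[i][k] for i in range(m)] for k in range(m)]
        y = solve(B_T, [c[j] for j in basis])               # Step 1: y^T = c_B^T B^-1
        r = {j: c[j] - sum(y[i] * A[i][j] for i in range(m))
             for j in range(n) if j not in basis}
        e = min(r, key=r.get)                               # Step 2: most negative r_j
        if r[e] >= 0:
            x = [Fraction(0)] * n
            for j, v in zip(basis, x_B):
                x[j] = v
            return x, sum(cj * xj for cj, xj in zip(c, x))
        abar = solve(B, [A[i][e] for i in range(m)])        # abar_e = B^-1 a_e
        ratios = [(x_B[i] / abar[i], i) for i in range(m) if abar[i] > 0]
        if not ratios:                                      # Step 3: abar_e <= 0
            raise ValueError("problem is unbounded")
        _, o = min(ratios)
        basis[o] = e                                        # Step 4: swap columns


# Hypothetical instance: minimize -2 x1 - x2 with three "<=" constraints and slacks.
A = [[1, Fraction(8, 3), 1, 0, 0],
     [1, 1, 0, 1, 0],
     [2, 0, 0, 0, 1]]
x_opt, z_opt = simplex(A, [4, 2, 3], [-2, -1, 0, 0, 0], basis=[2, 3, 4])
```

On this instance the slack columns supply the initial basis, and the sketch reaches the optimum after two basis changes, far fewer than the ten column subsets a full enumeration would examine.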

Example – Primal Simplex Procedure Illustration

Suppose we start with the initial basis and .

Step 0. Initialization

Step 1. Calculate

and

Step 2. Then see , that is, is the incoming column, and calculate

Step 3. Since the ratios are, via the component-wise divide operation

The minimum ratio corresponds to column () that would be outgoing. That is replaces in the basis, which is now and .

Example – Primal Simplex Procedure Illustration

Step 4. Update

Return to Step 1.

Second Iteration

Step 1. Calculate

and

Stop! All of the reduced costs are positive so the current basic feasible solution is optimal!

Finding an Initial Basic Feasible Solution

- The simplex procedure needs to start from a basic feasible solution.

- Such a BFS is some times immediately available:

- if the constraints are of this form , with

- a BFS to the corresponding standard form of the problem is provided by the slack variables.

- An initial BFS is not always apparent for other types of LPs.

- For those, an auxiliary LP and a corresponding application of the simplex method can be used to determined the required initial solution.

- An LP can always be expressed in the so-called Phase I form:

- In order to find a solution to Equation 28, we consider the artificial minimization problem (Phase One linear program)

where is a vector of artifical variables.

If there is a feasible solution to Equation 28, then it is clear that Equation 29 has a minimum value of zero with all artificial variables equal to zero ($u = 0$).

If Equation 28 has no feasible solution, then the minimum value of Equation 29 is greater than zero.

Equation 29 is itself an LP, and a BFS for it is $x = 0$, $u = b$. It can readily be solved using the simplex technique.
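As a rough illustration of the Phase One idea, the sketch below builds the auxiliary problem, starts from the artificial basis, and pivots until no reduced cost is negative. It uses a plain tableau rather than the revised form, exact `Fraction` arithmetic, and no anti-cycling rule; the function name `phase_one` and the test data are hypothetical.

```python
from fractions import Fraction

def phase_one(A, b):
    """Solve min sum(u) s.t. A x + u = b, x >= 0, u >= 0 by tableau pivoting.

    Returns (value, x). value == 0 means x is feasible for A x = b, x >= 0;
    value > 0 means the original constraints have no feasible solution.
    """
    m, n = len(A), len(A[0])
    rows = []
    for i in range(m):
        s = -1 if b[i] < 0 else 1               # flip the row so that b_i >= 0
        rows.append([Fraction(s * v) for v in A[i]]
                    + [Fraction(int(i == k)) for k in range(m)]  # artificial columns
                    + [Fraction(s * b[i])])
    basis = list(range(n, n + m))                # initial BFS: x = 0, u = b
    # Reduced costs for cost vector (0,...,0,1,...,1) with the artificial basis.
    cost = [-sum(r[j] for r in rows) if j < n else Fraction(0)
            for j in range(n + m)]
    cost.append(-sum(r[-1] for r in rows))       # last entry: minus the objective
    while True:
        e = min(range(n + m), key=lambda j: cost[j])
        if cost[e] >= 0:                         # no negative reduced cost left
            break
        cand = [i for i in range(m) if rows[i][e] > 0]
        o = min(cand, key=lambda i: rows[i][-1] / rows[i][e])  # ratio test
        rows[o] = [v / rows[o][e] for v in rows[o]]
        for i in range(m):
            if i != o:
                f = rows[i][e]
                rows[i] = [vi - f * vo for vi, vo in zip(rows[i], rows[o])]
        f = cost[e]
        cost = [vc - f * vo for vc, vo in zip(cost, rows[o])]
        basis[o] = e
    x = [Fraction(0)] * n
    for i, j in enumerate(basis):
        if j < n:
            x[j] = rows[i][-1]
    return -cost[-1], x

# Hypothetical data: x1 + x2 = 2, x1 - x2 = 0 is feasible;
# x1 + x2 = 1 together with x1 + x2 = 2 is not.
value_ok, x_ok = phase_one([[1, 1], [1, -1]], [2, 0])
value_bad, _ = phase_one([[1, 1], [1, 1]], [1, 2])
```

When the returned value is zero, the final basis can serve as a starting point for the simplex method on the original problem, provided no artificial variable remains basic.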

The Dual Simplex Method

Motivations

- Often there is a basis to an LP that is not feasible for the primal problem, but its simplex multiplier vector is feasible for the dual.

- That is, $y^T = c_B^T B^{-1}$ and $r_D^T = c_D^T - y^T D \ge 0$.

- Then we can apply the dual simplex method moving from the current solution to a new BFS with a better objective value.

- Assume $B$ consists of the first $m$ columns of $A$.

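- Define a new dual variable vector $y'$ via an affine transformation

$$y'^T = y^T B - c_B^T, \qquad \text{equivalently} \qquad y^T = (y' + c_B)^T B^{-1}, \tag{30}$$

and substitute $y$ in the dual, which becomes

$$\begin{aligned} \text{maximize} \quad & y'^T \bar{a}_0 + c_B^T \bar{a}_0 \\ \text{subject to} \quad & y' \le 0, \\ & y'^T B^{-1} D \le r_D^T, \end{aligned} \tag{31}$$

where $\bar{a}_0 = B^{-1} b$ and $r_D^T = c_D^T - c_B^T B^{-1} D$.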

Transformed Dual – Variable $y'$

- $y' = 0$ is a BFS.

- If $\bar{a}_0 = B^{-1} b \ge 0$, i.e., the primal basic solution is also feasible, then $y' = 0$ is optimal.

- This implies $y^T = c_B^T B^{-1}$ is optimal to the original dual.

- The vector $\bar{a}_0$ can be viewed as the scaled gradient vector of the dual objective function at basis $B$.

- If one entry of $\bar{a}_0$ is negative, say $\bar{a}_{o0} < 0$, then one can decrease the variable $y'_o$ to some $-\varepsilon < 0$ while keeping the others at $0$.

- The new $y'$ remains feasible, but its objective value would increase linearly in $\varepsilon$.

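- The second block of constraints in Equation 31 becomes

$$-\varepsilon\, e_o^T B^{-1} D \le r_D^T, \tag{32}$$

where $e_o$ is the $o$th unit vector, so that $e_o^T B^{-1} D$ is the $o$th row of $B^{-1} D$.

- To keep dual feasibility, we need to choose $\varepsilon$ such that this vector constraint is satisfied componentwise.

- If all entries in $e_o^T B^{-1} D$ are nonnegative, then $\varepsilon$ may be chosen arbitrarily large so the dual objective is unbounded.

- If some are negative, we can increase $\varepsilon$ until one of the inequality constraints in Equation 32 becomes an equality.

- Say the $e$th constraint becomes an equality. This indicates that the current nonbasic column $a_e$ replaces $a_o$ in the new basis.

- The determination can be done by calculating the componentwise ratios $r_j / (-\bar{a}_{oj})$ for the entries $\bar{a}_{oj} < 0$ of $e_o^T B^{-1} D$ and taking the minimum (incoming column $a_e$).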

Dual Simplex Method

- In each cycle we find a new feasible dual solution such that one of the equalities becomes inequality and one of the inequalities becomes equality.

- At the same time we increase the value of the dual objective function.

- The equalities in the new solution then determine a new basis.

One difference, in contrast to the primal simplex method, is that here the outgoing column is selected first and the incoming one is chosen later.

Step 0. Given the inverse $B^{-1}$ of a dual feasible basis $B$, primal solution $\bar{a}_0 = B^{-1} b$, dual feasible solution $y^T = c_B^T B^{-1}$, and reduced cost vector $r_D^T = c_D^T - y^T D \ge 0$.

Step 1. If $\bar{a}_0 \ge 0$, stop; the current solution pair is optimal. Otherwise, determine which column $a_o$ is to leave the basis by selecting the most negative entry, the $o$th entry (break ties arbitrarily), in $\bar{a}_0$. Now calculate $\bar{y}^T = e_o^T B^{-1}$ and then calculate $\bar{a}^o = \bar{y}^T D$.

Step 2. If $\bar{a}^o \ge 0$, stop; the dual is unbounded, so the primal has no feasible solution. Otherwise, calculate the ratios $r_j / (-\bar{a}_{oj})$ for $\bar{a}_{oj} < 0$ to determine the current nonbasic column $a_e$, with $e$ the index of the minimum ratio, to become basic.

Step 3. Update the basis $B$ (or its factorization), and update the primal solution $\bar{a}_0$, the dual feasible solution $y$, and the reduced cost vector $r_D$ accordingly. Return to Step 1.
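The dual procedure above can be sketched the same way. This is an illustrative sketch under stated assumptions, not a reference implementation: `solve` and `dual_simplex` are hypothetical names, linear systems with $B$ are re-solved at each iteration instead of updating $B^{-1}$, arithmetic is exact via `Fraction`, and the starting basis is assumed to be dual feasible. The example LP is made-up data with surplus columns as the starting basis.

```python
from fractions import Fraction

def solve(M, rhs):
    """Solve M z = rhs by Gauss-Jordan elimination (M square, nonsingular)."""
    n = len(M)
    T = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(M, rhs)]
    for k in range(n):
        p = next(i for i in range(k, n) if T[i][k] != 0)
        T[k], T[p] = T[p], T[k]
        T[k] = [v / T[k][k] for v in T[k]]
        for i in range(n):
            if i != k and T[i][k] != 0:
                f = T[i][k]
                T[i] = [vi - f * vk for vi, vk in zip(T[i], T[k])]
    return [row[n] for row in T]

def dual_simplex(A, b, c, basis):
    """Minimize c^T x s.t. A x = b, x >= 0, from a dual feasible basis."""
    m, n = len(A), len(A[0])
    basis = list(basis)
    dot = lambda v, j: sum(v[i] * A[i][j] for i in range(m))  # v^T a_j
    while True:
        B = [[A[i][j] for j in basis] for i in range(m)]
        Bt = [list(col) for col in zip(*B)]
        x_B = solve(B, b)                          # primal basic solution
        o = min(range(m), key=lambda i: x_B[i])    # Step 1: most negative entry
        if x_B[o] >= 0:                            # primal feasible: optimal
            x = [Fraction(0)] * n
            for j, v in zip(basis, x_B):
                x[j] = v
            return x, basis
        y = solve(Bt, [c[j] for j in basis])       # y^T = c_B^T B^{-1}
        y_bar = solve(Bt, [int(i == o) for i in range(m)])  # row o of B^{-1}
        nonbasic = [j for j in range(n) if j not in basis]
        r = {j: c[j] - dot(y, j) for j in nonbasic}    # reduced costs (>= 0)
        a_o = {j: dot(y_bar, j) for j in nonbasic}     # row o of B^{-1} D
        cand = [j for j in nonbasic if a_o[j] < 0]
        if not cand:                               # Step 2: no negative entry
            raise ValueError("dual unbounded: primal has no feasible solution")
        e = min(cand, key=lambda j: r[j] / -a_o[j])    # minimum ratio
        basis[o] = e                               # Step 3: swap the columns

# Hypothetical data: min 2 x1 + 3 x2  s.t.  x1 + x2 - s1 = 3,  x1 + 2 x2 - s2 = 4.
A = [[1, 1, -1, 0], [1, 2, 0, -1]]
b = [3, 4]
c = [2, 3, 0, 0]
x, final_basis = dual_simplex(A, b, c, basis=[2, 3])  # surplus basis: x_B < 0
```

The surplus basis gives $y = 0$ and $r_D = (2, 3) \ge 0$, so it is dual feasible but not primal feasible, which is exactly the situation the method is meant for.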

Example – Dual Simplex Procedure Illustration

- We start with the initial basis and .

Step 0. Initialization

and

Step 1. We see that only the second component of is negative so that (which corr. to column ). Now, we compute

Step 2. Since all components in are negative, the componentwise ratios are

Here, we see the minimum ratio is the first component so that (which corresponds to column ), that is replaces in the current basis.

Step 3. The new basis is .

and

Stop! The solution pair is optimal!

The Primal-Dual Algorithm

- We can work simultaneously on the primal and dual problems to solve LP problems.

- The procedure begins with a feasible solution to the dual that is improved at each step by optimizing an associated restricted primal problem.

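- As the method progresses, it can be regarded as striving to achieve the complementary slackness conditions for optimality.

- Consider the primal program

$$\text{minimize} \quad c^T x \qquad \text{subject to} \quad Ax = b, \; x \ge 0,$$

and the corresponding dual program

$$\text{maximize} \quad y^T b \qquad \text{subject to} \quad y^T A \le c^T. \tag{33}$$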

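- Given a feasible solution $y$, not necessarily basic, to the dual, define the subset $P$ of indices $\{1, 2, \dots, n\}$ by $j \in P$ if $y^T a_j = c_j$.

- Since $y$ is dual feasible, we have $y^T a_j < c_j$ for $j \notin P$.

- Corresponding to $y$ and the index set $P$, we define the associated restricted primal problem

$$\begin{aligned} \text{minimize} \quad & \mathbf{1}^T u \\ \text{subject to} \quad & \sum_{j \in P} a_j x_j + u = b, \\ & x_j \ge 0, \; j \in P, \quad u \ge 0. \end{aligned} \tag{34}$$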

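- The dual of this associated restricted primal is called the associated restricted dual with dual variable vector $y'$:

$$\begin{aligned} \text{maximize} \quad & y'^T b \\ \text{subject to} \quad & y'^T a_j \le 0, \; j \in P, \\ & y' \le \mathbf{1}. \end{aligned} \tag{35}$$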

Primal-Dual Optimality Theorem

Suppose that $y$ is feasible for the original dual and that $x$ and $u = 0$ are feasible (and of course optimal) for the associated restricted primal. Then $x$ and $y$ are optimal for the original primal and dual programs, respectively.

Proof

Clearly $x$ is feasible for the primal. Also, we have $c^T x = y^T A x$ because $y^T A$ is identical to $c^T$ on the components corresponding to the nonzero elements of $x$. Thus $c^T x = y^T A x = y^T b$ and optimality follows from strong duality or complementary slackness.
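The theorem can be checked numerically on a small instance. The sketch below computes the index set of tight dual constraints and tests the hypotheses of the theorem for a candidate pair. The helper names (`dot_col`, `tight_set`, `is_optimal_pair`) and the data are hypothetical, and exact integer arithmetic is assumed so that equality tests are meaningful.

```python
def dot_col(v, A, j):
    """Inner product of the vector v with column j of A."""
    return sum(v[i] * A[i][j] for i in range(len(A)))

def tight_set(A, c, y):
    """Index set P of dual constraints holding with equality: y^T a_j = c_j."""
    n = len(A[0])
    assert all(dot_col(y, A, j) <= c[j] for j in range(n)), "y must be dual feasible"
    return [j for j in range(n) if dot_col(y, A, j) == c[j]]

def is_optimal_pair(A, b, c, x, y):
    """True if x is primal feasible and vanishes outside P (that is, u = 0)."""
    P = tight_set(A, c, y)
    primal_feasible = all(v >= 0 for v in x) and all(
        sum(A[i][j] * x[j] for j in range(len(x))) == b[i]
        for i in range(len(A)))
    supported_on_P = all(x[j] == 0 for j in range(len(x)) if j not in P)
    return primal_feasible and supported_on_P

# Hypothetical data: min 2 x1 + 3 x2  s.t.  x1 + x2 - s1 = 3,  x1 + 2 x2 - s2 = 4.
A = [[1, 1, -1, 0], [1, 2, 0, -1]]
b = [3, 4]
c = [2, 3, 0, 0]
x, y = [2, 1, 0, 0], [1, 1]

P = tight_set(A, c, y)
primal_value = sum(ci * xi for ci, xi in zip(c, x))
dual_value = sum(yi * bi for yi, bi in zip(y, b))
```

For this pair the primal and dual objective values coincide, as the proof predicts, because $x$ is nonzero only on indices in $P$.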

The Primal-Dual Algorithm

Step 1. Given a feasible solution $y$ to the dual program Equation 33, determine the associated restricted primal according to Equation 34.

Step 2. Optimize the associated restricted primal. If the minimal value of this problem is zero, the corresponding solution $x$, together with $y$, is an optimal pair for the original LP Equation 33.

Step 3. If the minimal value of the associated restricted primal is strictly positive, the maximal objective value of the associated restricted dual Equation 35 is also positive from the strong duality theorem, that is, its optimal solution $y'$ satisfies $y'^T b > 0$. If there is no $j \notin P$ for which $y'^T a_j > 0$, conclude from Farkas’s lemma that the primal has no feasible solutions.

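Step 4. If, on the other hand, $y'^T a_j > 0$ for at least one $j \notin P$, define the new dual feasible vector

$$y(\varepsilon) = y + \varepsilon\, y',$$

where the stepsize $\varepsilon \ge 0$ is chosen as large as possible until one of the constraints $j \notin P$ becomes an equality:

$$y(\varepsilon)^T a_j = y^T a_j + \varepsilon\, y'^T a_j = c_j.$$

If $\varepsilon$ can be increased to $\infty$, then the original dual is unbounded. Otherwise $\varepsilon > 0$ and we go back to Step 1 using this new dual feasible solution, whose dual objective is strictly increased.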
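The stepsize rule in Step 4 is a ratio test over the dual constraints that the direction $y'$ pushes toward equality. A minimal sketch follows, assuming the restricted dual has already been solved by other means; `dual_step` is a hypothetical helper and the data are made up.

```python
from fractions import Fraction

def dual_step(A, c, y, y_prime):
    """Largest eps keeping y + eps * y_prime dual feasible.

    Returns (eps, new_y), or (None, None) if no constraint ever becomes tight,
    in which case the step can grow without bound.
    """
    m, n = len(A), len(A[0])

    def dot_col(v, j):
        return sum(Fraction(v[i]) * A[i][j] for i in range(m))

    # Only constraints with y'^T a_j > 0 limit the step; the slack is c_j - y^T a_j.
    ratios = [(c[j] - dot_col(y, j)) / dot_col(y_prime, j)
              for j in range(n) if dot_col(y_prime, j) > 0]
    if not ratios:
        return None, None
    eps = min(ratios)
    return eps, [Fraction(y[i]) + eps * y_prime[i] for i in range(m)]

# Hypothetical data: min 2 x1 + 3 x2  s.t.  x1 + x2 - s1 = 3,  x1 + 2 x2 - s2 = 4.
A = [[1, 1, -1, 0], [1, 2, 0, -1]]
c = [2, 3, 0, 0]
y = [0, 0]          # dual feasible start; tight constraints are the surplus columns
y_prime = [1, 1]    # optimal solution of the restricted dual for this y
eps, y_new = dual_step(A, c, y, y_prime)
```

In this instance both structural constraints become tight at the same stepsize, so the next restricted primal contains the columns of $x_1$ and $x_2$.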
