0.5199909210205078 msec elapsed for NM

[0.70710678 0.70710678]

Instructor: Hasan A. Poonawala

Mechanical and Aerospace Engineering

University of Kentucky, Lexington, KY, USA

Topics:

Dual function and problem

Examples

Local Duality

Sensitivity

Primal Optimization problem:

Introduce the Lagrangian associated with the problem, defined as

Let then

Take the infimum of both sides

Dual function

Lower bound property

for any dual feasible pair

Dual feasibility

The pair is dual feasible if and

Dual optimization problem

Dual optimization problem

Convexity of the dual

The dual problem is a convex optimization problem

Proof

By definition, is the pointwise infimum of affine functions of , and is therefore concave. is an affine constraint. Hence, the dual problem is maximization of a concave function with affine constraints, which is a convex optimization problem.

Let

Clearly,

For convex problems that satisfy appropriate conditions, like Slater’s conditions1,

Generally, the duality gap is , which is non-negative

When is feasible, the lower bound property can be rewritten as

and the supremum is achieved when

Therefore

By weak duality,

and under strong duality:

In turn, , where 1

We can simplify the problem to

Define

This function is convex in (but not in all variables), with minimum given by the FONC:

Therefore, the dual of the SVM problem is:

Eliminating , we get the following dual SVM problem:

Maximal flow problem

Determine the maximal flow that can be established in such a network.

where for those no-arc pairs .

Linear Program (LP)

An LP is an optimization problem in which the objective function is linear in the unknowns and the constraints consist of linear (in)equalities.

Symmetric form primal LP

Determine the most economical diet that satisfies the basic minimum nutritional requirements for good health

We want to minimize the total cost

subject to the nutritional constraints

and the nonnegative constraints on the food quantities.

We wish to manufacture products at maximum revenue

subject to the resource constraints

| Primal | Dual | |||

|---|---|---|---|---|

| subject to | subject to | |||

If is an matrix, then is an -vector, is an -vector, is an vector, and is an -vector.

The vector is the variable of the primal program, and is the variable of the dual program.

Important

The roles of the primal and the dual can be reversed!

Multiplying the objective and the constraints by minus unity, the dual has the structure of the primal.

Its corresponding dual will be equivalent to the original primal.

Primal LP (Standard Form LP)

Conversion to dual (Inequality Form LP)

Partition the dual vector as , we get

Letting , this is simplified as

The dual of the dual is the primal!

| Primal/Dual | Dual/Primal | |

|---|---|---|

| Obj. coef. vector | | | Right-hand-side |

| Right-hand-side | | | Obj. coef. vector |

| | | ||

| Max model | | | Min model |

| | | constraint sense: | |

| | | constraint sense: | |

| free | | | constraint sense: |

| constraint sense: | | | |

| constraint sense: | | | |

| constraint sense: | | | free |

Throughout this section, we consider the primal-dual pair

Weak Duality Lemma

If and are feasible for the primal-dual pair, then .

Proof

We have the last inequality being valid sincd and .

Corollary

If and are feasible for the primal-dual pair and if , then and are optimal for their respective problems.

Duality Theorem of LP

If either of the primal-dual pair problems has a finite optimal solution, so does the other, and the corresponding values of the objective functions are equal. If either problem has an unbounded objective, the other problem has no feasible solution.

Proof

Firstly, the second statement is an immediate consequence of weak duality. If the primal is unbounded and is feasible for the dual, we must have for arbitrarily large , which is clearly impossible.

Let us assume that the primal has a finite optimal solution and show that the dual has a solution with the same value (recall primal/dual are reversible). We prove that if the primal problem is feasible and its minimal value is bounded from below, then the system

has a feasible solution pair and . The first system in Equation 2 is the primal constraint system, the second is the dual constraint system, and the third is the reversed duality gap, which, together with weak duality, implies zero-duality gap .

Proof - Continued -

We first show that the dual must be feasible, since otherwise, from Farkas’s lemma the alternative system to the second system Equation 2 must be feasible, that is, there is such that . Let be any given feasible solution for the primal, then the solution must also be feasible for the primal for any scalar . But the primal objective value at this solution is which is unbounded from below as leading to a contradiction.

Now, both the primal and the dual are feasible but suppose their optimal values are not equal; that is, the whole system Equation 2 remains infeasible. Then its alternative system must be feasible. That is, there are to satisfy the constraints

Case 1: in Equation 3, then we have which is a contradiction.

Case 2: in Equation 3, then we let be any feasible solution for the primal and be any feasible solution for the dual. Again must also be feasible for the primal and must also be feasible for the dual, and the objective gap at this pair is which is not bounded below by as and creates a contradition to weak duality.

Diet Problem

Production Problem

Theorem

The minimal value function of the linear program Equation 1 is a convex function, and the optimal dual solution is a subgradient vector of the function at , written as .

Theorem

The minimal value function of the linear program Equation 1 is a convex function, and the optimal dual solution is a subgradient vector of the function at , written as .

Proof

Let and be two optimal solutions of Equation 1 corresponding to two right-hand-side vectors and , resp. Then, for any scalar , is a feasible solution of Equation 1 with so that the minimal value is

which implies the first claim.

Furthermore, let be the optimal dual solution with . Note that remains feasible for the dual of primal with because the dual feasible region is independent of changes in . Thus

which proves the second claim.

The Lagrange multipliers associated with a constrained minimization problem have an interpretation as prices, similar to the prices in LP.

Let a minimal solution be a regular point and be the corresponding Lagrange multiplier vector. Consider the family of problems

Sensitivity Theorem

Consider the family of problems Equation 4. Suppose that for every in a region containing , its minimizer is continuously differentiable depending on . Let with the corresponding Lagrange multiplier . Then

Sensitivity Theorem

Consider the family of problems Equation 4. Suppose that for every in a region containing , its minimizer is continuously differentiable depending on . Let with the corresponding Lagrange multiplier . Then

Proof

Using the chain rule and taking derivatives with respect to on both sides of

at , we have

On the other hand, using the chain rule and the first-order condition for and the above matrix equality

To solve the dual optimization problem, we need to solve

The dual function is defined using a constrained optimization problem:

Can we replace the inner constrained optimization with unconstrained local optimization?:

where

Local duality answers ‘yes’ in some cases.

Dual Function

First-Order Necessary Conditions

together with the constraint. These equations have a solution at The Hessian of the corresponding Lagrangian is Since this is positive definite, we conclude that the solution obtained is a local minimum (it is, in fact, a global minimum).

Local Duality

Since is positive definite, we can apply the local duality theory near this solution. which leads to valid for . It can be verified that has a local maximum at . Plugging this value back in Equation 8 and maximizing (unconstrained) over and yields the same maximizers as before.

Nonlinear Programming Problem

Everything we do can be easily extended to problems having inequality as well as equality constraints for the price of a somewhat more involved notation.

and the Hessian of the Lagrangian

must be positive semidefinite on the tangent subspace

Local Convexity Assumption

We assume that the Hessian is positive definite. (We mean that on the whole space , not just on the subspace .)

This assumption guarantees that the Lagrangian is locally convex at .

has a solution near when is near because is positive definite.

Thus, locally there is a unique correspondence between and through the solution of the unconstrained problem Equation 8.

Near we define the dual function by the equation

We are then able to show that locally the original constrained problem Equation 8 is equivalent to unconstrained local maximization of the dual function with respect to .

Lemma 1

The dual function has gradient

Proof

We have explicitly from Equation 10 Thus Since the first term on the right vanishes by the defition of (the unique solution to Equation 9), we obtain Equation 11.

Lemma 2

The Hessian of the dual function is

Proof

By Lemma 1, .

Differentiating with respect to , we obtain

Solving for and substituting back to the first equation, we are through.

Local Duality Theorem

Suppose that the problem has a local solution at with corresponding value and Lagrange multiplier . Suppose also that is a regular point of the constraints and that the corresponding Hessian of the Lagrangian is positive definite. Then the dual problem has a local solution at with corresponding value and as the point corresponding to in the definition of .

Proof

It is clear that corresponds to in the definition of . Now at we have by Lemma 1, and by Lemma 2, the Hessian of is negative definite. Thus satisfies the SOSC for an unconstrained maximum point of . The corresponding value is found from the definition of to be .

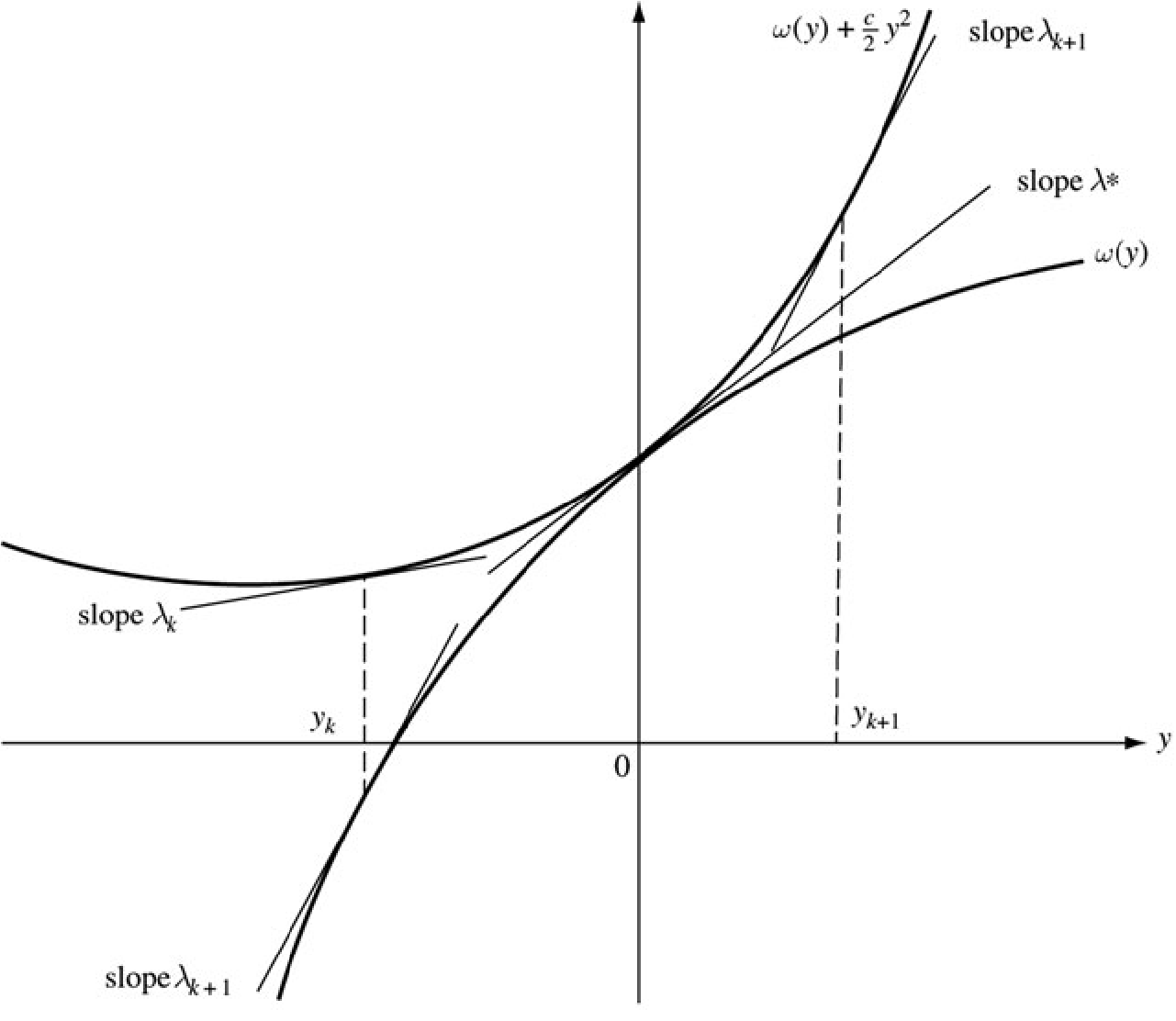

The modified dual optimization problem is which has the same optimum as the true dual optimization problem, under appropriate conditions.

From Lemma 1, the gradient at is given by the constraint functions evaluated at the optimum of

To solve the modified dual optimization problem we run the following iterations:

Here, is the first-order Lipschitz constant of the dual function .

0.5199909210205078 msec elapsed for NM

[0.70710678 0.70710678]

0.9369850158691406 msec elapsed for NM

[0.70710678 0.70710678]

Lemma

Let and be -by- symmetric matrices. Suppose that is positive semidefinite and is positive definite on the subspace . Then there is a such that for all the matrix is positive definite.

Proof

Suppose to the contrary that for every there were an with such that . The sequence must have a convergent subsequence converging to a limit . Now since , it follows that . It also follows that . However, this contradicts the hypothesis of the lemma.

This lemma applies to the Hessian of the augmented Lagrangian, evaluated at the optimal solution pair , .

This leads directly to the first basic result concerning augmented Lagrangian.

Proposition

Assume that the second-order sufficiency conditions for a local minimum are satisfied at , . Then there is a such that for all , the augmented Lagrangian has a local minimum point at .

where the minimization with respect to is taken to be locally near .

Primal function

where the minimum is understood to be taken locally near .

Then, the augmented Lagrangian function for Equation 14 would be

In contrast to the method of multipliers that we previously covered, the alternating direction method of multipliers (ADMM) is to (approximately) minimize in an alternative order:

Systems Optimization I • ME 647 Home